Fashion++: Minimal Edits for Outfit Improvement

Wei-Lin Hsiao

Isay Katsman*

Chao-Yuan Wu*

Devi Parikh

Kristen Grauman

UT Austin

Cornell Tech

Georgia Tech

Facebook AI Research (FAIR)

In ICCV 2019

Minimal outfit edits suggest minor changes to an existing outfit in order to improve its fashionability. For example, changes might entail (left) removing an accessory; (middle) changing to a blouse with a higher neckline; (right) tucking in a shirt. Our model consists of a deep image generation neural network that learns to synthesize clothing conditioned on learned per-garment encodings. The latent encodings are explicitly factorized according to shape and texture, thereby allowing direct edits for both fit/presentation and color/patterns/material, respectively. We show how to bootstrap Web photos to automatically train a fashionability model, and develop an activation maximization-style approach to transform the input image into its more fashionable self. Experiments demonstrate that Fashion++ provides successful edits, both according to automated metrics and human opinion.

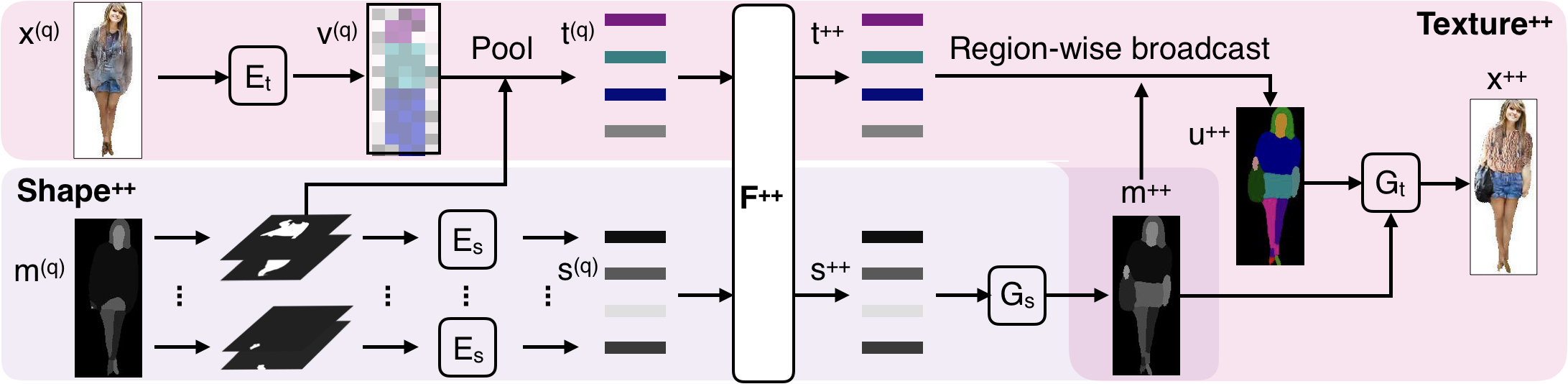

Fashion++ Framework

We first obtain latent features from the texture and shape encoders Et and Es. Our editing module F++ operates on the latent texture feature t and shape feature s. After an edit, the shape generator Gs decodes the updated shape feature s++ back into a 2D segmentation mask m++. This mask is then used to broadcast the updated texture feature t++, region by region, into a 2D feature map u++. The feature map and the updated segmentation mask are passed to the texture generator Gt to generate the final updated outfit x++.
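The region-wise broadcast step can be illustrated with a minimal NumPy sketch. It assumes the segmentation mask stores one integer region label per pixel and that the texture feature holds one code vector per region; the function name `broadcast_texture` and the array layout are hypothetical choices for illustration, not taken from the Fashion++ code.

```python
import numpy as np

def broadcast_texture(mask, textures):
    """Paint each pixel with the texture code of the region it belongs to.

    mask:     (H, W) integer array of region labels in [0, R)  (assumed layout)
    textures: (R, D) array, one D-dimensional texture code per region
    returns:  (H, W, D) feature map
    """
    mask = np.asarray(mask)
    textures = np.asarray(textures)
    if mask.min() < 0 or mask.max() >= textures.shape[0]:
        raise ValueError("mask contains a label with no texture code")
    # Integer-array indexing looks up the row of `textures` for every pixel.
    return textures[mask]

# Toy example: a 2x3 mask with three regions and 2-dimensional texture codes.
mask = np.array([[0, 0, 1],
                 [2, 1, 1]])
textures = np.array([[1.0, 0.0],
                     [0.0, 1.0],
                     [5.0, 5.0]])
u = broadcast_texture(mask, textures)
```

In this sketch, changing one row of `textures` changes every pixel of the corresponding region at once, which is the sense in which a per-garment texture edit propagates to the whole garment.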

Fashion++ Example Suggestions

Fashion++ offers a spectrum of edits for each outfit, ranging from versions that change less to versions that improve fashionability more, so users can choose the version they prefer.
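One way to picture such a spectrum is an activation maximization-style loop that takes repeated gradient ascent steps on a latent encoding and keeps every intermediate result. The sketch below is illustrative only: a hypothetical logistic scorer with fixed weights `w` and bias `b` stands in for the learned fashionability model, and treating each step as one point on the spectrum is an assumption of this sketch rather than a description of the released system.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def edit_spectrum(z0, w, b, step=0.5, n_steps=5):
    """Gradient ascent on a latent vector to raise a stand-in score.

    Returns a list of (latent, score) pairs: the original encoding first,
    then one entry per ascent step.
    """
    z = np.asarray(z0, dtype=float).copy()
    w = np.asarray(w, dtype=float)
    spectrum = [(z.copy(), float(sigmoid(w @ z + b)))]
    for _ in range(n_steps):
        p = sigmoid(w @ z + b)
        grad = p * (1.0 - p) * w      # gradient of sigmoid(w.z + b) w.r.t. z
        z = z + step * grad           # move the latent toward a higher score
        spectrum.append((z.copy(), float(sigmoid(w @ z + b))))
    return spectrum

# Hypothetical 4-dimensional encoding and scorer weights.
z0 = np.zeros(4)
w = np.array([1.0, -2.0, 0.5, 0.0])
spectrum = edit_spectrum(z0, w, b=-1.0)
scores = [s for _, s in spectrum]
distances = [float(np.linalg.norm(z - z0)) for z, _ in spectrum]
```

Under these assumptions, later entries lie farther from the original encoding and score higher, mirroring the trade-off between changing less and improving more.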