Changan Chen*¹,⁴, Unnat Jain*²,⁴, Carl Schissler³, Sebastia V. Amengual Gari³, Ziad Al-Halah¹, Vamsi Krishna Ithapu³, Philip Robinson³, Kristen Grauman¹,⁴

¹UT Austin, ²UIUC, ³Facebook Reality Labs, ⁴Facebook AI Research

Accepted at ECCV 2020 (Spotlight)

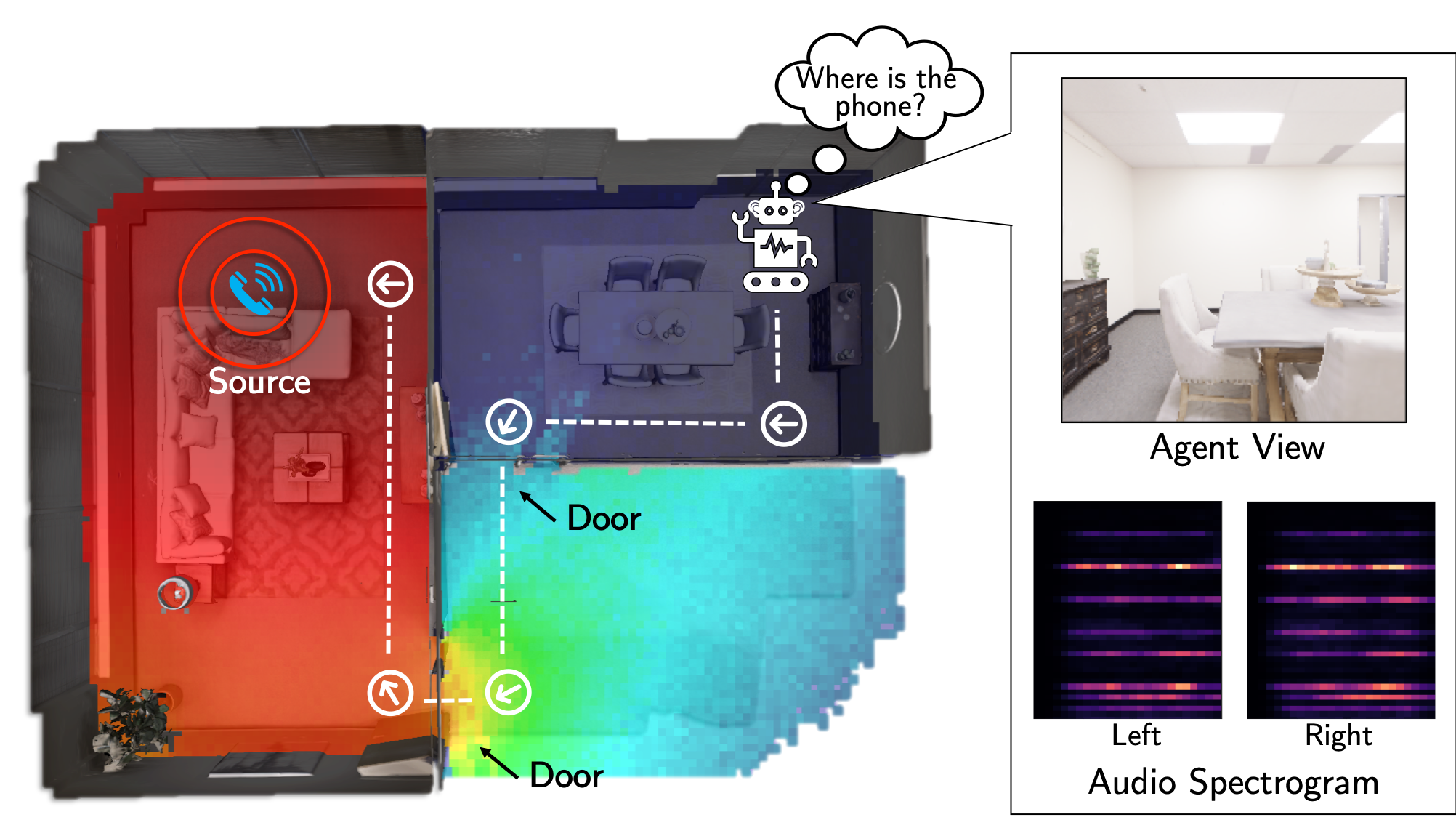

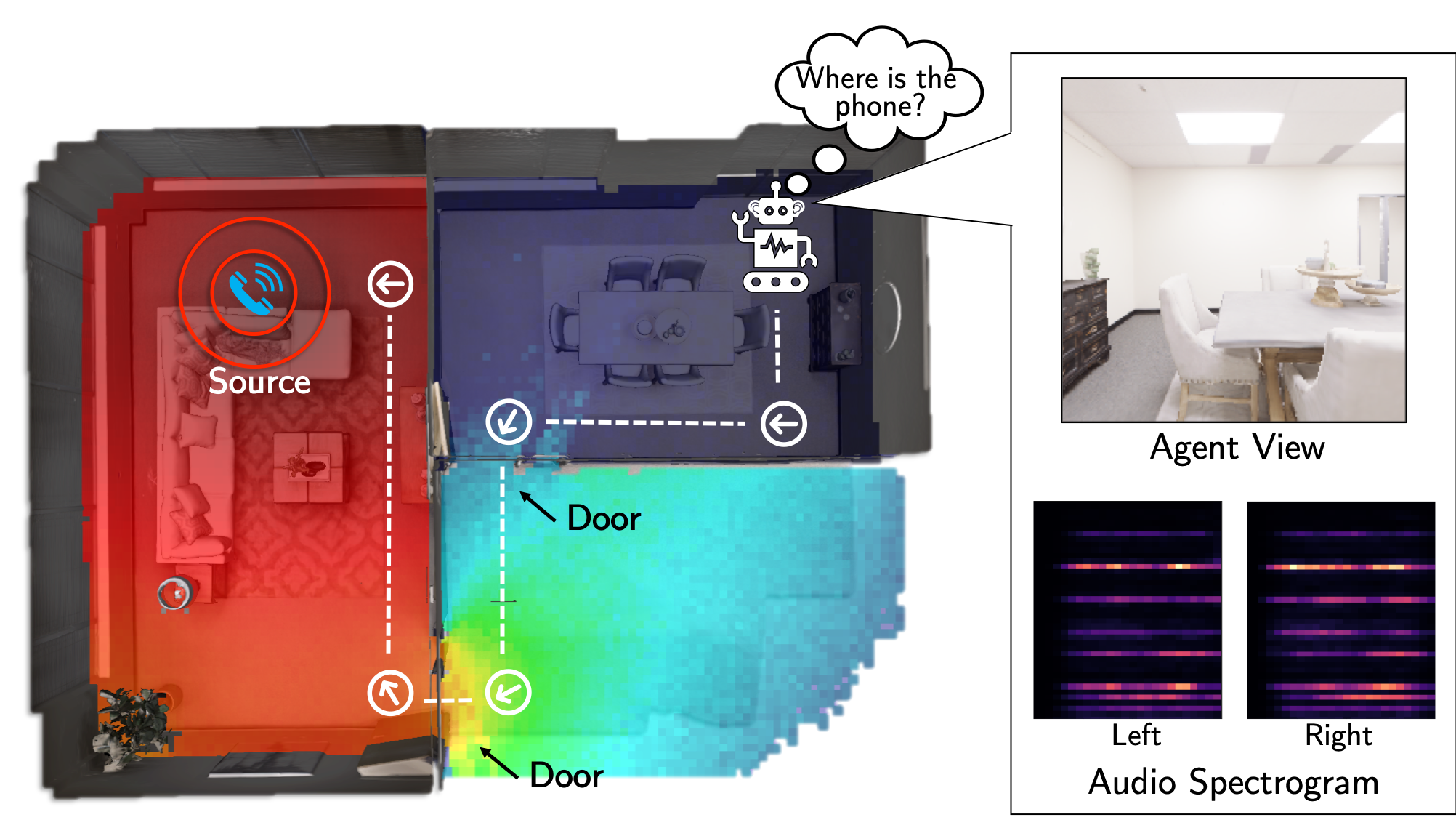

Moving around in the world is naturally a multisensory experience, but today's embodied agents are deaf, restricted solely to their visual perception of the environment. We introduce audio-visual navigation for complex, acoustically and visually realistic 3D environments. By both seeing and hearing, the agent must learn to navigate to a sounding object. We propose a multi-modal deep reinforcement learning approach to train navigation policies end-to-end from a stream of egocentric audio-visual observations, allowing the agent to (1) discover elements of the geometry of the physical space indicated by the reverberating audio and (2) detect and follow sound-emitting targets. We further introduce SoundSpaces: a first-of-its-kind dataset of audio renderings based on geometrical acoustic simulations for two sets of publicly available 3D environments (Matterport3D and Replica), and we instrument Habitat to support the new sensor, making it possible to insert arbitrary sound sources in an array of real-world scanned environments. Our results show that audio greatly benefits embodied visual navigation in 3D spaces, and our work lays the groundwork for new research in embodied AI with audio-visual perception.
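To make the idea of inserting an arbitrary sound source more concrete, here is a minimal sketch. It assumes the audio renderings are available as a pair of left/right impulse responses for a given source and agent location. That is an assumption for illustration, since the text above does not specify the storage format. The function names and toy data are hypothetical and are not part of the SoundSpaces or Habitat APIs.

```python
from typing import List, Sequence, Tuple


def convolve(signal: Sequence[float], impulse_response: Sequence[float]) -> List[float]:
    """Full discrete convolution of a dry signal with one impulse-response channel."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out


def render_binaural(
    source: Sequence[float],
    rir_left: Sequence[float],
    rir_right: Sequence[float],
) -> Tuple[List[float], List[float]]:
    """Return the (left, right) waveforms a two-eared agent would receive."""
    return convolve(source, rir_left), convolve(source, rir_right)


# Hypothetical toy data: a short click, and impulse responses in which the
# sound reaches the left ear earlier and louder than the right ear.
source = [1.0, 0.5]
rir_left = [0.0, 0.8, 0.0, 0.1]
rir_right = [0.0, 0.0, 0.4, 0.05]

left, right = render_binaural(source, rir_left, rir_right)
```

In this toy case the left channel starts one sample earlier and with more energy than the right, which is the kind of cue an agent could use to infer the direction of a sounding target. A practical implementation would likely use an FFT-based convolution rather than this quadratic loop.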

A quick overview video (2 min); corresponding slides: [Teaser slides].

A detailed video (10 min); corresponding slides: [Detailed slides].

(1) Changan Chen*, Unnat Jain*, Carl Schissler, Sebastia Vicenc Amengual Gari, Ziad Al-Halah, Vamsi Krishna Ithapu, Philip Robinson, Kristen Grauman. SoundSpaces: Audio-Visual Navigation in 3D Environments. In ECCV 2020. [Bibtex]

(2) Ruohan Gao, Changan Chen, Carl Schissler, Ziad Al-Halah, Kristen Grauman. VisualEchoes: Spatial Image Representation Learning through Echolocation. In ECCV 2020. [Bibtex]

(3) Changan Chen, Sagnik Majumder, Ziad Al-Halah, Ruohan Gao, Santhosh Kumar Ramakrishnan, Kristen Grauman. Audio-Visual Waypoints for Navigation. arXiv 2020. [Bibtex]

UT Austin is supported in part by DARPA Lifelong Learning Machines. We thank Alexander Schwing, Dhruv Batra, Erik Wijmans, Oleksandr Maksymets, Ruohan Gao, and Svetlana Lazebnik for valuable discussions and support with the AI-Habitat platform.

Copyright © 2020 University of Texas at Austin