Sharing Features Between Objects and Their Attributes

Sung Ju Hwang, Fei Sha and Kristen Grauman

The University of Texas at Austin

University of Southern California

1) Idea

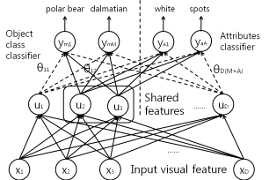

Main idea. We build a model in which object categories and their human-defined visual attributes share a lower-dimensional representation (dashed lines indicate zero-valued connections), so that attribute-level supervision regularizes the learned object models.

2) Approach

2.1) Learning Shared Features via Regularization

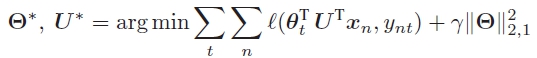

The objective function we want to minimize is as follows:

2.2) Convex Optimization

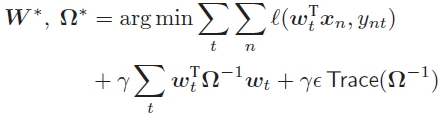

Because the objective in 2.1) is not convex, owing to the (2,1)-norm and possibly to the choice of loss function, we instead solve an equivalent optimization problem of the form:
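For readers unfamiliar with the (2,1)-norm mentioned above, the following is a minimal sketch of how it is commonly computed: take the Euclidean norm of each row of the weight matrix, then sum those row norms. The layout assumed here (rows are feature dimensions, columns are tasks such as object classes and attributes) and the matrix `W` are illustrative assumptions, not taken from the authors' Matlab code.

```python
import math

def l21_norm(W):
    """(2,1)-norm: sum over rows of the Euclidean norm of each row."""
    return sum(math.sqrt(sum(w * w for w in row)) for row in W)

# Hypothetical weight matrix (assumption): rows = features, columns = tasks.
W = [
    [3.0, 4.0],  # feature used by both tasks, row norm 5
    [0.0, 0.0],  # feature used by no task, row norm 0
    [0.0, 2.0],  # feature used by one task, row norm 2
]

print(l21_norm(W))  # 7.0
```

Because the penalty grows with the number of non-zero rows, minimizing it tends to switch off whole features across all tasks at once, which is what encourages objects and attributes to share a small set of features.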

2.3) Extension to Kernel Classifiers

2.4) Implementation

The implementation is in Matlab and is based on Andreas Argyriou's implementation of convex multi-task feature learning.

3) Results

3.1) Datasets

We use two datasets: Animals with Attributes and the Outdoor Scene Recognition dataset.

| Dataset | Number of images | Number of classes | Number of attributes |
|---|---|---|---|
| Animals with Attributes | 30,475 | 50 | 85 |
| Outdoor Scene Recognition Dataset | 2,688 | 8 | 6 |

Table 1. Dataset statistics

3.2) Impact of Sharing Features

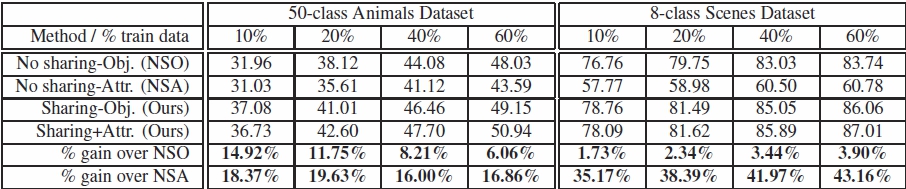

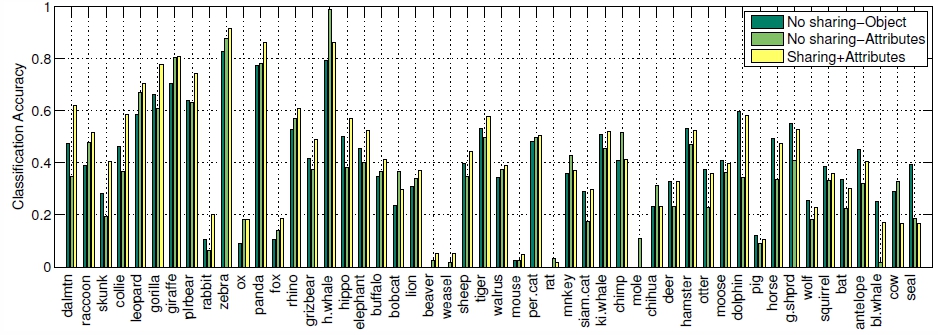

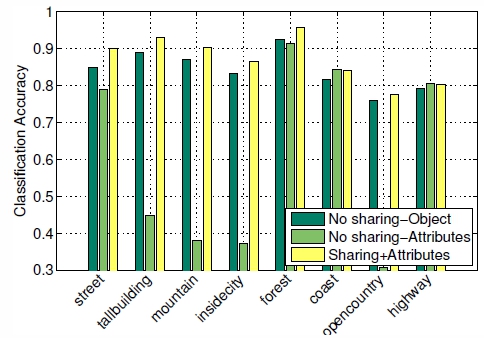

We define two baselines. No Sharing-Object (NSO) is a traditional multi-class object recognition approach using an SVM with a chi-square kernel. No Sharing-Attributes (NSA) treats attributes as intermediate features: we train SVMs to predict attribute labels and then perform direct attribute prediction. We evaluate the object recognition accuracy of our approach and the baselines on both datasets, using four training splits of increasing size (10% to 60%).
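As an illustration of the chi-square kernel used by the NSO baseline, here is a minimal sketch of one common variant, the exponential chi-square kernel on non-negative histogram features. The page does not say which form of the kernel or which bandwidth was used, so the formula, the `gamma` parameter, and the toy histograms below are all assumptions.

```python
import math

def chi2_kernel(x, y, gamma=1.0):
    """Exponential chi-square kernel between two non-negative histograms.

    k(x, y) = exp(-gamma * sum_i (x_i - y_i)^2 / (x_i + y_i))
    """
    dist = 0.0
    for a, b in zip(x, y):
        if a + b > 0:  # skip bins that are empty in both histograms
            dist += (a - b) ** 2 / (a + b)
    return math.exp(-gamma * dist)

def gram_matrix(X, gamma=1.0):
    """Pairwise kernel values for a list of histograms."""
    return [[chi2_kernel(xi, xj, gamma) for xj in X] for xi in X]

# Hypothetical toy histograms (assumption), one per image.
X = [[0.5, 0.5], [1.0, 0.0], [0.0, 1.0]]
K = gram_matrix(X)
```

A Gram matrix like `K` can be handed to any SVM solver that accepts precomputed kernels, for example scikit-learn's `SVC(kernel="precomputed")`.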

Table 2. Accuracy on both datasets as a function of training set size.

Accuracy on AWA classes.

Accuracy on OSR classes.

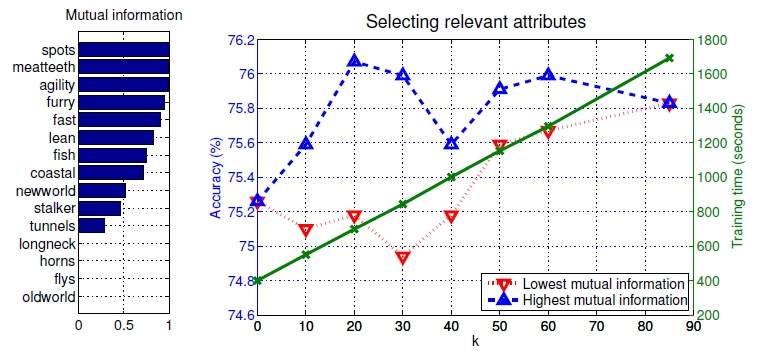

3.3) Selecting Relevant Attributes

Not all attributes benefit feature sharing, and some may even be detrimental. How, then, can we select the attributes that actually help object class recognition? We score each attribute by its mutual information with the classes we want to recognize, rank the attributes, and drop those with low mutual information. Experiments on attributes sorted by mutual information confirm that this simple method can improve object recognition accuracy or reduce training time.
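The selection procedure described above can be sketched as follows, assuming discrete (here binary) attribute labels per training image and estimating mutual information from empirical counts. The function names, the toy labels, and the choice to keep only the top-ranked attribute are illustrative assumptions rather than the authors' implementation.

```python
import math
from collections import Counter

def mutual_information(attr, labels):
    """Empirical mutual information (in bits) between two discrete sequences."""
    n = len(labels)
    joint = Counter(zip(attr, labels))
    count_a = Counter(attr)
    count_c = Counter(labels)
    mi = 0.0
    for (a, c), count in joint.items():
        # p(a, c) * log2( p(a, c) / (p(a) * p(c)) ), written with raw counts
        mi += (count / n) * math.log2(count * n / (count_a[a] * count_c[c]))
    return mi

def rank_attributes(attributes, labels):
    """Return (name, score) pairs sorted from most to least informative."""
    scores = {name: mutual_information(vals, labels)
              for name, vals in attributes.items()}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)

# Hypothetical toy data (assumption): four images, two classes, two attributes.
labels = ["zebra", "zebra", "whale", "whale"]
attributes = {
    "striped": [1, 1, 0, 0],  # perfectly predicts the class
    "big":     [1, 0, 1, 0],  # independent of the class
}

ranked = rank_attributes(attributes, labels)
keep = [name for name, _ in ranked[:1]]  # keep only the top-ranked attribute
print(ranked)
```

In this toy case "striped" scores 1 bit and "big" scores 0 bits, so only "striped" would be kept for feature sharing.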

Mutual information of selected classes and the accuracy as a function of the number of attributes sorted by mutual information.

Publication

Sharing Features Between Objects and Their Attributes. [pdf]

Sung Ju Hwang, Fei Sha and Kristen Grauman

To appear in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Colorado Springs, CO, June 2011