Inferring Analogous Attributes

Chao-Yeh Chen and Kristen Grauman

The University of Texas at Austin

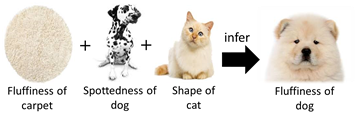

The appearance of an attribute can vary

considerably from class to class (e.g., a “fluffy” dog vs. a “fluffy” towel), making

standard class-independent attribute models break down. Yet, training

object-specific models for each attribute can be impractical, and defeats the

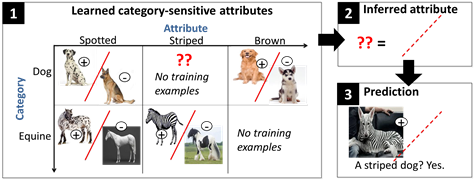

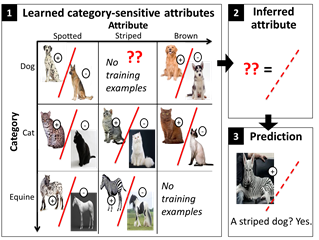

purpose of using attributes to bridge category boundaries. We propose a novel

form of transfer learning that addresses this dilemma. We develop a tensor

factorization approach which, given a sparse set of class-specific attribute

classifiers, can infer new ones for object-attribute pairs unobserved during training.

For example, even though the system has no labeled images of striped dogs, it

can use its knowledge of other attributes and objects to tailor “stripedness” to the dog category. With two large-scale

datasets, we demonstrate both the need for category-sensitive attributes as

well as our method’s successful transfer. Our inferred attribute classifiers

perform similarly well to those trained with the luxury of labeled

class-specific instances, and much better than those restricted to traditional

modes of transfer.

Problem: we want to learn category-sensitive attributes

- Attributes: visual properties that describe objects and transcend object and scene category boundaries.

- Status quo approach: learn a single attribute classifier with data from all possible object/scene categories.

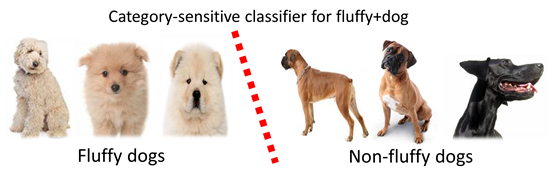

But are attributes truly category-independent? The appearance of an attribute can vary considerably from class to class (e.g., a “fluffy” dog vs. a “fluffy” towel).


An intuitive but impractical solution…

- Category-sensitive attributes: learn a separate attribute classifier for each object category.

Example: learn a category-sensitive “fluffy” attribute for the dog category from positive and negative examples.

Flaws:

- Ignores the semantic sharing that some attributes do possess.

- Makes unrealistic assumptions about the availability of training data.

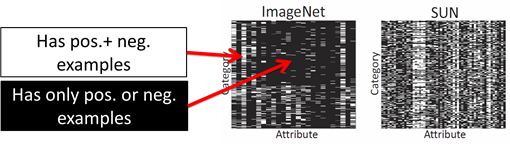

Data availability for training category-sensitive attributes in the ImageNet and SUN datasets.

Approach

Overview

- Infer the missing category-sensitive attribute classifiers with tensor completion.

(1) Learning category-sensitive attributes

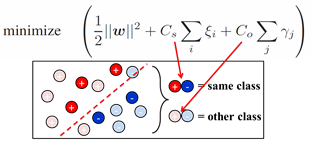

- Train each category-sensitive attribute with an importance-weighted support vector machine (SVM).

- Attributes’ visual cues are shared among some objects, but the sharing is not universal.
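A minimal sketch of an importance-weighted linear SVM, assuming the weighting simply scales each example's hinge-loss term: examples from the target object get weight 1, and examples borrowed from other objects get a smaller weight. The helper name `train_weighted_svm`, the weight value 0.2, and the plain subgradient-descent solver are illustrative assumptions, not the authors' training procedure.

```python
import numpy as np

def train_weighted_svm(X, y, sample_weight, lam=0.01, epochs=200, lr=0.1):
    """Linear SVM with per-example importance weights s_i, fit by subgradient descent on
    lam/2 * ||w||^2 + (1/N) * sum_i s_i * max(0, 1 - y_i * (w . x_i + b)).

    X: (N, D) features; y: (N,) labels in {-1, +1}; sample_weight: (N,) weights >= 0.
    """
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    for _ in range(epochs):
        margins = y * (X @ w + b)
        active = margins < 1  # examples inside the margin contribute to the hinge loss
        coef = sample_weight * y * active  # weighted hinge subgradient coefficients
        w -= lr * (lam * w - coef @ X / n)
        b -= lr * (-coef.sum() / n)
    return w, b

# Toy usage: target-category examples (weight 1) plus examples borrowed from
# another category with a hypothetical, smaller weight of 0.2.
X = np.array([[2, 2], [3, 2], [2, 3], [-2, -2], [-3, -2], [-2, -3],  # target object
              [1.5, 1], [-1, -1.5]], dtype=float)                    # other object
y = np.array([1, 1, 1, -1, -1, -1, 1, -1], dtype=float)
s = np.array([1, 1, 1, 1, 1, 1, 0.2, 0.2])
w, b = train_weighted_svm(X, y, s)
```

Lowering a borrowed example's weight shrinks its influence on the learned boundary without discarding it, which is one way to exploit partial sharing of visual cues across objects.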

(2)

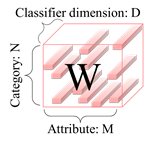

Object-attribute

classifier tensor

- Construct a tensor composed of the sparse set of explicitly trained category-sensitive attribute classifiers.

- Each W(n,m,:) is a category-sensitive SVM weight vector trained for the n-th object and m-th attribute.

- Because labeled data exist for only some object-attribute pairs, the tensor is sparse (75% missing values).

- For nonlinear classifiers, we use explicit kernel maps.
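A small sketch of how such a tensor might be assembled, assuming the trained classifiers are stored in a dict keyed by (object, attribute) index pairs; the helper name `build_classifier_tensor` and the toy sizes are hypothetical. When features are first lifted with an explicit kernel map, D is simply the dimension of the mapped features.

```python
import numpy as np

def build_classifier_tensor(trained, n_objects, n_attributes, dim):
    """Stack explicitly trained weight vectors into an (N, M, D) tensor.

    trained: dict mapping (n, m) -> weight vector of length D for each
             object-attribute pair that had enough labeled data.
    Returns the tensor (zeros where unobserved) and a boolean (N, M) mask
    marking the observed object-attribute pairs.
    """
    W = np.zeros((n_objects, n_attributes, dim))
    observed = np.zeros((n_objects, n_attributes), dtype=bool)
    for (n, m), w in trained.items():
        W[n, m, :] = w
        observed[n, m] = True
    return W, observed

# Hypothetical toy example: 2 objects x 2 attributes, only one pair trained.
trained = {(0, 1): np.array([0.5, -1.0, 2.0])}
W, observed = build_classifier_tensor(trained, n_objects=2, n_attributes=2, dim=3)
missing_fraction = 1.0 - observed.mean()  # 3 of 4 pairs unobserved
```

Keeping a separate mask matters because a missing fiber W(n,m,:) is unknown, not zero; the completion step should fit only the observed fibers.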

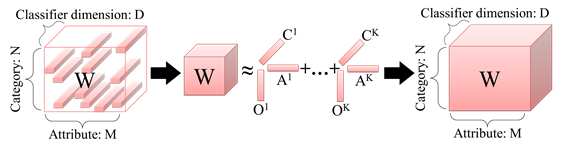

(3) Inferring analogous attributes

- Apply Bayesian probabilistic tensor factorization [Xiong et al. 2010].

- Use the recovered latent factors to impute the unobserved classifier parameters.
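To illustrate the imputation step, the sketch below swaps in a simpler, non-Bayesian stand-in: an L2-regularized CP decomposition W(n,m,d) ≈ Σ_k O(n,k) A(m,k) F(d,k), fit by alternating least squares on the observed fibers only. Bayesian probabilistic tensor factorization instead places priors on the factors and samples them; this sketch does not. The function name, rank, regularization, and iteration count are hypothetical choices.

```python
import numpy as np

def complete_classifier_tensor(W, observed, rank=2, reg=1e-3, iters=300, seed=0):
    """Impute missing fibers W[n, m, :] with a masked, L2-regularized CP
    decomposition W[n, m, d] ~ sum_k O[n, k] * A[m, k] * F[d, k], fit by
    alternating least squares over observed (n, m) pairs only."""
    N, M, D = W.shape
    rng = np.random.default_rng(seed)
    O = rng.normal(size=(N, rank))
    A = rng.normal(size=(M, rank))
    F = rng.normal(size=(D, rank))
    ridge = reg * np.eye(rank)

    def solve(Z, t):
        # Ridge-regression solution of Z x ~ t.
        return np.linalg.solve(Z.T @ Z + ridge, Z.T @ t)

    for _ in range(iters):
        for n in range(N):  # object factors, one row at a time
            ms = np.flatnonzero(observed[n])
            if ms.size:
                Z = (A[ms][:, None, :] * F[None, :, :]).reshape(-1, rank)
                O[n] = solve(Z, W[n, ms, :].reshape(-1))
        for m in range(M):  # attribute factors
            ns = np.flatnonzero(observed[:, m])
            if ns.size:
                Z = (O[ns][:, None, :] * F[None, :, :]).reshape(-1, rank)
                A[m] = solve(Z, W[ns, m, :].reshape(-1))
        ns, ms = np.nonzero(observed)  # feature-dimension factors, all at once
        Z = O[ns] * A[ms]  # one row per observed pair, shape (P, rank)
        F = np.linalg.solve(Z.T @ Z + ridge, Z.T @ W[ns, ms, :]).T
    return np.einsum("nk,mk,dk->nmd", O, A, F)

# Toy check: a random rank-2 tensor with 25% of its (n, m) fibers hidden.
rng = np.random.default_rng(1)
truth = np.einsum("nk,mk,dk->nmd", rng.normal(size=(8, 2)),
                  rng.normal(size=(6, 2)), rng.normal(size=(5, 2)))
observed = (np.add.outer(np.arange(8), np.arange(6)) % 4) != 0
W_hat = complete_classifier_tensor(np.where(observed[:, :, None], truth, 0.0), observed)
```

Entries of `W_hat` at unobserved pairs are the imputed classifiers; observed pairs are reproduced only approximately, since the low-rank model is fit rather than interpolated.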

(4) Discussion


- Analogous attributes transfer information from multiple objects and attributes.

- Novel transfer idea: use tensor completion to infer classifiers that are “untrainable” from the available data.

- Assumes some common structure among the explicitly trained category-sensitive models.

Results

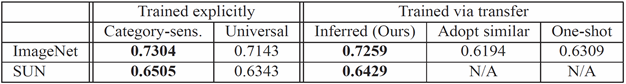

Accuracy (mAP) of attribute prediction

- We infer classifiers for 26K category-sensitive attributes; the category-sensitive baseline is impossible to train for 70% of them!

- Our method outperforms the universal baseline in 79% of cases, with an average AP gain of 0.13.

Accuracy (mAP) of attribute prediction. Total: 664 categories × 84 attributes.
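The exact evaluation protocol is not spelled out here; for reference, a sketch of the common non-interpolated definition of average precision (mean of the precision at the rank of each positive), and mAP as the mean of per-classifier APs. The function names are illustrative, and labels equal to 1 are assumed to mark positives.

```python
import numpy as np

def average_precision(scores, labels):
    """Non-interpolated AP: mean precision at the rank of each positive (label == 1)."""
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    hits = np.asarray(labels)[order] == 1
    if not hits.any():
        return 0.0
    precision_at_k = np.cumsum(hits) / np.arange(1, hits.size + 1)
    return float(precision_at_k[hits].mean())

def mean_average_precision(score_lists, label_lists):
    """mAP: average of the per-classifier AP values."""
    return float(np.mean([average_precision(s, l)
                          for s, l in zip(score_lists, label_lists)]))

# Positives ranked 1st and 3rd: AP = (1/1 + 2/3) / 2 = 5/6.
ap = average_precision([0.9, 0.8, 0.3, 0.1], [1, 0, 1, 0])
```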

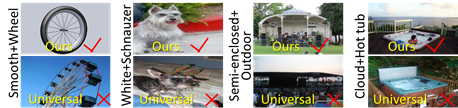

Example predictions

Test images that our method (top row) and the universal method (bottom row) predicted most confidently as having the named attribute. (✓ = positive for the attribute, ✗ = negative, according to ground truth.)

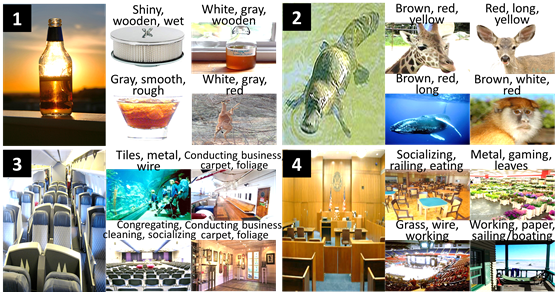

Discovered analogous attributes

- Which categories are found to be analogous for an attribute? Each example shows the categories nearest to a query category in the latent space, along with their most similar attributes.

Analogous attribute examples for ImageNet (top) and SUN (bottom). Words above each neighbor indicate the 3 most similar attributes (learned or inferred) between the leftmost query category and its neighboring categories in latent space. Format: query category: neighboring categories.

1. Bottle: filter, syrup, bullshot, gerenuk.

2. Platypus: giraffe, ungulate, rorqual, patas.

3. Airplane cabin: aquarium, boat deck, conference center, art studio.

4. Courtroom: cardroom, florist shop, performance arena, beach house.
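A sketch of how such neighbors could be read off the factorization, assuming cosine similarity between rows of the object factor matrix (called `O` here) for finding neighbors, and cosine similarity between per-attribute classifiers of the completed tensor (called `W_hat`) for ranking shared attributes. The similarity measure and all names are assumptions; the text above does not specify them.

```python
import numpy as np

def _cosine(a, b, eps=1e-12):
    # Cosine similarity along the last axis (eps guards against zero vectors).
    return (a * b).sum(-1) / (np.linalg.norm(a, axis=-1) * np.linalg.norm(b, axis=-1) + eps)

def nearest_categories(O, query, k=4):
    """Indices of the k categories whose latent factors are closest (cosine) to the query's."""
    sims = _cosine(O, O[query][None, :])
    sims[query] = -np.inf  # exclude the query itself
    return np.argsort(-sims)[:k]

def most_similar_attributes(W_hat, a, b, k=3):
    """Attributes whose (learned or inferred) classifiers for categories a and b agree most."""
    sims = _cosine(W_hat[a], W_hat[b])  # one similarity per attribute
    return np.argsort(-sims)[:k]

# Toy latent factors for 4 hypothetical categories.
O = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [-1.0, 0.0]])
neighbors = nearest_categories(O, query=0, k=2)
```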

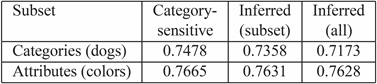

Focusing on semantically close data

- Could side information about relatedness further ease transfer? Yes: restricting the tensor to closely related objects and closely related attributes yields even better results.

Attribute label prediction mAP when restricting the tensor to semantically close classes. The explicitly trained category-sensitive classifiers serve as an upper bound.
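Restricting the tensor amounts to slicing out a sub-tensor before completion. A minimal sketch, assuming relatedness is given as a similarity matrix (for example, a semantic similarity between category names) and a hypothetical threshold; the helper names and toy values are illustrative only.

```python
import numpy as np

def related_indices(similarity, query, threshold):
    """Indices whose similarity to `query` meets a (hypothetical) threshold, query included."""
    return np.flatnonzero(similarity[query] >= threshold)

def restrict_tensor(W, observed, object_ids, attribute_ids):
    """Sub-tensor over the selected objects and attributes; the feature axis is kept whole."""
    rows_cols = np.ix_(object_ids, attribute_ids)
    return W[rows_cols], observed[rows_cols]

# Toy usage: 3 objects x 4 attributes x 2 feature dims.
obj_sim = np.array([[1.0, 0.8, 0.1], [0.8, 1.0, 0.2], [0.1, 0.2, 1.0]])
objs = related_indices(obj_sim, query=0, threshold=0.5)
W = np.arange(3 * 4 * 2, dtype=float).reshape(3, 4, 2)
observed = np.ones((3, 4), dtype=bool)
W_sub, obs_sub = restrict_tensor(W, observed, objs, [0, 2])
```

The smaller tensor is then completed as before; the trade-off is fewer observed classifiers to borrow from, in exchange for more closely shared structure.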

Inferring nonlinear classifiers

- We use the homogeneous kernel map of order 3 to approximate a nonlinear SVM with a chi-square kernel.

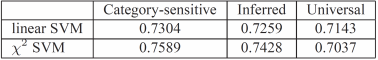

Results using kernel maps to infer nonlinear SVMs.
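A sketch of an order-3 homogeneous kernel map for the additive chi-square kernel k(x, y) = Σ_d 2 x_d y_d / (x_d + y_d), following the standard construction: the kernel's spectrum κ(λ) = sech(πλ) is sampled at λ = jL for j = 0..3, giving 2·3 + 1 = 7 outputs per input dimension, so a linear SVM on the mapped features approximates the chi-square SVM. The sampling period L = 0.5 and the function names are illustrative assumptions (library implementations may choose the period differently), and inputs are assumed nonnegative, as for histograms.

```python
import numpy as np

def chi2_kernel(x, y):
    """Additive chi-square kernel sum_d 2 x_d y_d / (x_d + y_d), with 0/0 taken as 0."""
    num = 2.0 * x * y
    den = x + y
    return float(np.sum(np.divide(num, den, out=np.zeros_like(num), where=den > 0)))

def homogeneous_kernel_map_chi2(x, order=3, period=0.5):
    """Explicit feature map with 2*order+1 outputs per (nonnegative) input dimension.

    Samples the chi2 spectrum kappa(lam) = sech(pi * lam) at lam = j * period;
    the inner product of two mapped vectors approximates chi2_kernel.
    """
    x = np.asarray(x, dtype=float)
    logx = np.log(np.where(x > 0, x, 1.0))  # zeros get amplitude 0 below, so log is unused
    feats = [np.sqrt(x * period)]  # j = 0 term, kappa(0) = 1
    for j in range(1, order + 1):
        lam = j * period
        amp = np.sqrt(2.0 * x * period / np.cosh(np.pi * lam))
        feats.append(amp * np.cos(lam * logx))
        feats.append(amp * np.sin(lam * logx))
    return np.stack(feats, axis=-1).reshape(-1)

x = np.array([0.2, 0.0, 0.5])
y = np.array([0.4, 0.3, 0.5])
phi_x, phi_y = homogeneous_kernel_map_chi2(x), homogeneous_kernel_map_chi2(y)
approx, exact = float(phi_x @ phi_y), chi2_kernel(x, y)
```

Because the map is explicit, each nonlinear classifier becomes an ordinary weight vector in the mapped space, which is what lets it sit in the classifier tensor alongside the linear ones.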

Conclusion

- Attributes are not strictly category-independent → need category-sensitive attributes.

- Infer analogous classifiers from observed classifiers organized by inter-related label spaces.

- Enables category-sensitive attribute classifiers even when category-specific labeled data are unavailable.
