Abstract

Existing methods to learn visual attributes are prone to learning the wrong thing, namely properties that are correlated with the attribute of interest among training samples. Yet many proposed applications of attributes rely on being able to learn the correct semantic concept corresponding to each attribute. We propose to resolve such confusions by jointly learning decorrelated, discriminative attribute models. Leveraging side information about semantic relatedness, we develop a multi-task learning approach that uses structured sparsity to encourage feature competition among unrelated attributes and feature sharing among related attributes. On three challenging datasets, we show that accounting for structure in the visual attribute space is key to learning attribute models that preserve semantics, yielding improved generalizability that helps in the recognition and discovery of unseen object categories.

Motivation: The curse of correlation

Semantic visual attributes are supposed to be shareable across categories, and in many of their envisioned applications they are expected to be detected correctly in novel settings entirely different from the attribute training data. Yet the standard pipeline, which trains each attribute classifier independently, ignores this and is content to learn any property that happens to be correlated with the semantic attribute in the training data.

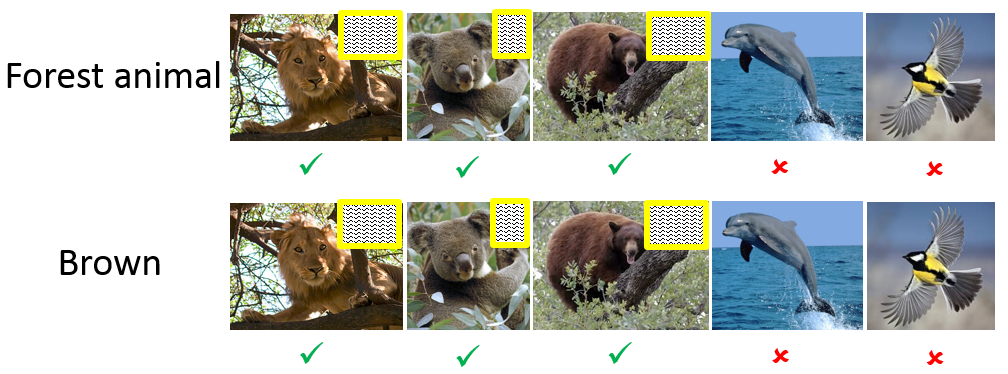

Figure: Given the above training data and no other information, can you figure out which concept to learn? Could it be, say, brown? What about furry? Forest animal? Or perhaps some combination of these?

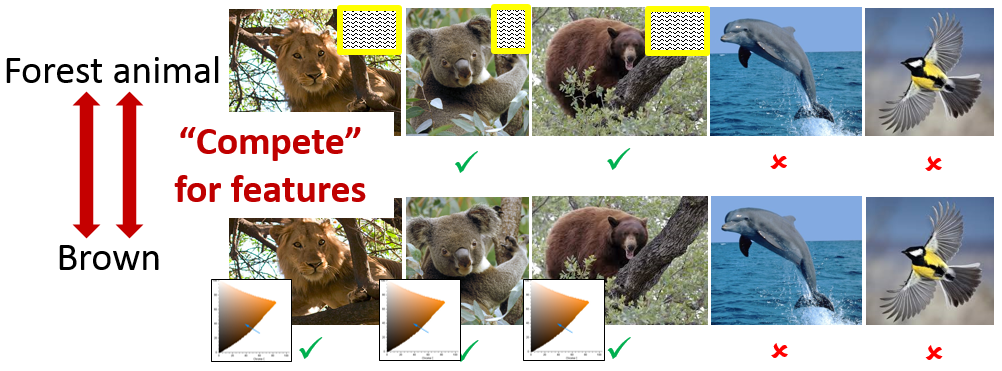

Figure: As an extreme case, suppose the same set of training images is used to train both forest animal and brown. The standard learner then simply learns the same concept for both, so it must be wrong on at least one of the two. In the example in the figure, the standard learner uses tree-like patterns as cues for both forest animal and brown; that is, the brown classifier is wrong.

Problem: Attributes that are correlated in the training data may easily be conflated by a learner.

Solution idea

Figure: Our idea is to encourage different attributes to use different features. By forcing the brown classifier and the forest animal classifier to compete for features, we hope to avoid such conflations and learn what it truly means to be brown. In the image above, the brown classifier correctly selects the color histogram features.

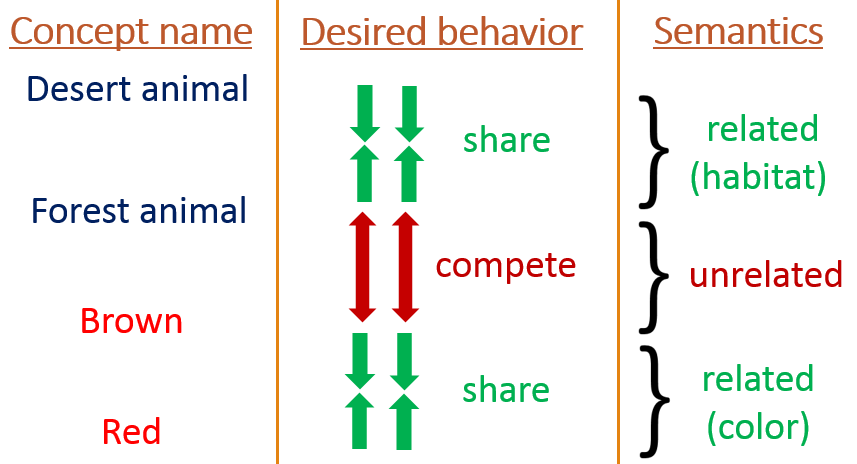

Figure: The key to our approach is to jointly learn all attributes in a vocabulary, while enforcing a structured sparsity prior that aligns feature sharing patterns with semantically close attributes and feature competition with semantically distant ones.
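To make "sharing within groups, competition across groups" concrete, here is a minimal sketch of one structured sparsity penalty with that behavior. It assumes the prior sums, over every feature dimension and every attribute group, the l2 norm of the weights that the group's attributes place on that dimension. This is a standard group-sparsity construction and an assumption on our part here. The function name and the toy groups are hypothetical.

```python
import math


def structured_sparsity_penalty(W, groups):
    """Sum over feature dimensions d and attribute groups g of the l2 norm
    of the weights that the attributes in g place on dimension d.

    W: list of D rows (one per feature dimension), each a list of M attribute weights.
    groups: list of lists of attribute column indices (groups may overlap).
    """
    total = 0.0
    for row in W:  # one feature dimension at a time
        for g in groups:
            # l2 within a group: spreading weight over group members is cheap
            total += math.sqrt(sum(row[m] ** 2 for m in g))
    # summing across groups acts like l1: reusing a dimension in another group is costly
    return total


# Toy example: one feature dimension, four attributes in two hypothetical groups.
groups = [[0, 1], [2, 3]]          # e.g. {brown, black} and {forest animal, arctic}
share = [[1.0, 1.0, 0.0, 0.0]]     # dimension used by two attributes in the SAME group
compete = [[1.0, 0.0, 1.0, 0.0]]   # dimension used by attributes in DIFFERENT groups

print(structured_sparsity_penalty(share, groups))    # sqrt(2), about 1.414
print(structured_sparsity_penalty(compete, groups))  # 1 + 1 = 2.0
```

With the same total weight, using one feature in two related attributes costs less than using it in two unrelated ones, so minimizing the penalty nudges unrelated attributes, such as brown and forest animal, toward different features.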

Inputs

- Training data: (image, binary attribute label) training tuples for each attribute

- Attribute relationships: "groups" of attributes, potentially overlapping, that cover the full vocabulary of attributes to be learned. These groups encode attribute relationships: group co-membership indicates semantic relatedness.
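The two inputs can be pictured with simple data structures. The following is a hedged sketch. The vocabulary, image IDs, and groups are hypothetical toy values, not the groupings used in the paper. It checks that the groups cover the vocabulary and lists which attribute pairs count as related through group co-membership.

```python
from itertools import combinations

# Hypothetical toy vocabulary; real groupings are dataset-specific.
vocabulary = ["brown", "black", "furry", "forest animal", "arctic"]
groups = [
    {"brown", "black"},           # a Color-like group
    {"furry", "brown"},           # hypothetical overlap: brown is in two groups
    {"forest animal", "arctic"},  # a Habitat-like group
]

# Training data: per attribute, a list of (image_id, binary label) tuples.
training_data = {
    "brown": [("img_001", 1), ("img_002", 0)],
    # ... one list per attribute in the vocabulary
}


def groups_cover(vocabulary, groups):
    """Check that every attribute belongs to at least one group."""
    covered = set().union(*groups)
    return set(vocabulary) <= covered


def related_pairs(groups):
    """Attribute pairs sharing at least one group, i.e. treated as semantically related."""
    pairs = set()
    for g in groups:
        for a, b in combinations(sorted(g), 2):
            pairs.add((a, b))
    return pairs


print(groups_cover(vocabulary, groups))  # True
print(sorted(related_pairs(groups)))
```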

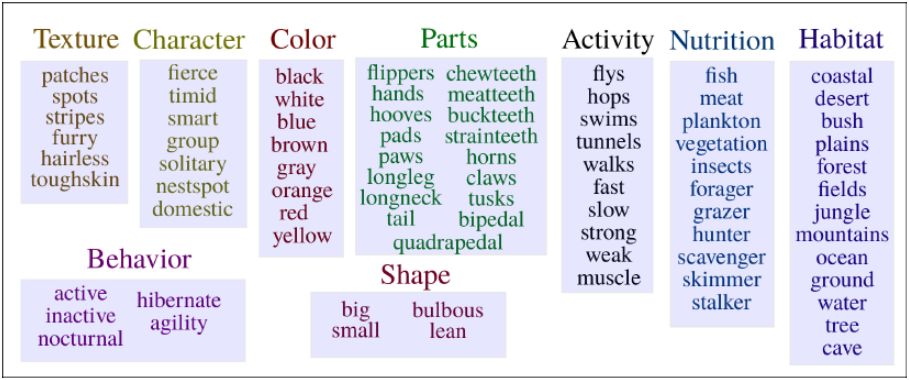

Figure: A grouping of attributes from the widely used Animals with Attributes dataset, proposed by Lampert et al. Each group contains semantically related attributes. Moreover, the attributes in each group can reasonably be expected to share some features; e.g., the Habitat group shares features from specific spatial channels around the peripheries of animal images, while the Color group relies on color-based features. See the supplementary material for the groups used on the other datasets.

In our formulation, these groups serve only as a soft prior while the attributes are learned jointly; they are not hard constraints. There may not be a single ground-truth grouping. Instead, our experiments indicate that most reasonable semantic grouping schemes are informative enough for our method to exploit beneficially.

Outputs

- a logistic regression classifier, i.e., a weight vector, for each attribute in the vocabulary
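Since each output is a logistic regression weight vector, applying the learned models to a new image amounts to one sigmoid per attribute. Below is a minimal sketch, assuming a plain feature vector per image and optional per-attribute bias terms. The feature layout, weights, and function names are hypothetical.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def attribute_probabilities(x, weights, biases=None):
    """Score one image feature vector x against every attribute model.

    weights: dict mapping attribute name -> weight vector (same length as x).
    biases: optional dict of per-attribute bias terms (assumed; default 0).
    Returns a dict of P(attribute present | x) under logistic regression.
    """
    biases = biases or {}
    probs = {}
    for name, w in weights.items():
        score = sum(wi * xi for wi, xi in zip(w, x)) + biases.get(name, 0.0)
        probs[name] = sigmoid(score)
    return probs


# Hypothetical 3-D features: [color-histogram bin, texture, spatial periphery]
weights = {"brown": [2.0, 0.0, 0.0], "forest animal": [0.0, 0.0, 2.0]}
x = [1.0, 0.5, 0.0]
print(attribute_probabilities(x, weights))
```

Here the decorrelated brown model looks only at the color feature, so an image with strong color evidence but no periphery evidence scores high for brown and neutral for forest animal.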

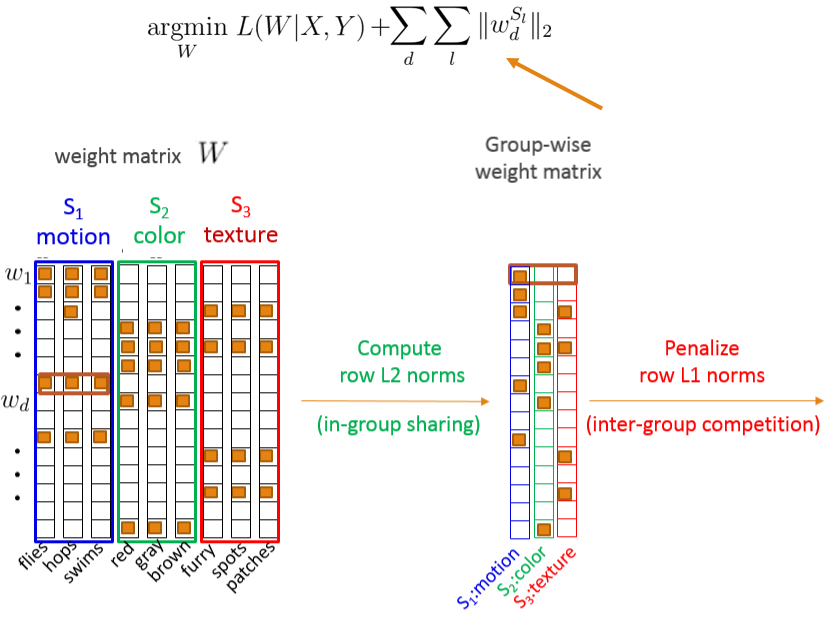

Formulation
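The formulation is shown on this page only as an image. The sketch below is one way to write a joint objective consistent with the Inputs and Outputs above: logistic-loss attribute models plus a group-structured sparsity prior. It is not a transcription of the paper. The notation ($W$, $\mathbf{w}_m$, $S_g$, $\lambda$) is ours, bias terms are omitted, and the exact form should be checked against the paper. Here $W = [\mathbf{w}_1, \dots, \mathbf{w}_M] \in \mathbb{R}^{D \times M}$ holds one weight vector per attribute, $y_n^{(m)} \in \{-1, +1\}$ are the binary labels, $S_g$ is the set of attributes in group $g$, and $\mathbf{w}_d^{S_g}$ is row $d$ of $W$ restricted to the columns in $S_g$:

$$
\min_{W}\; \sum_{m=1}^{M} \sum_{n=1}^{N_m} \log\!\left(1 + \exp\!\left(-y_n^{(m)}\, \mathbf{w}_m^{\top}\mathbf{x}_n^{(m)}\right)\right) \;+\; \lambda \sum_{d=1}^{D} \sum_{g=1}^{G} \left\lVert \mathbf{w}_d^{S_g} \right\rVert_2
$$

The first term trains each attribute on its own labeled data. The second term is the soft prior: the $\ell_2$ norm inside each group lets related attributes share a feature dimension cheaply, while the sum over groups penalizes reusing that dimension across unrelated groups. Setting $\lambda = 0$ recovers independent attribute training.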

Main result: attribute detection

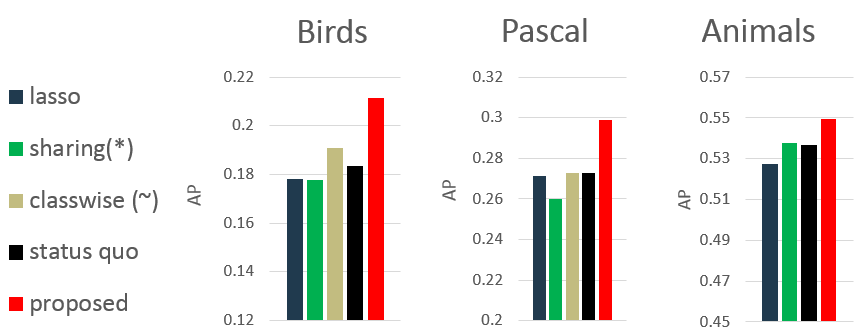

Figure: By decorrelating attributes, our attribute detectors generalize to novel, unseen categories much better than previous approaches.

See the paper for more extensive results, including attribute detection and localization examples, plus tests of attribute classifier applicability to high-level tasks like zero-shot recognition and category discovery.

BibTeX

@inproceedings{dinesh-cvpr2014,
  author = {D. Jayaraman and F. Sha and K. Grauman},
  title = {{Decorrelating Semantic Visual Attributes by Resisting the Urge to Share}},
  booktitle = {CVPR},
  year = {2014}
}