ShadowDraw: Real-Time User Guidance for Freehand Drawing

Yong Jae Lee, Larry Zitnick, and Michael Cohen

[SIGGRAPH 2011 paper] [slides] [video] [youtube] [data]

Abstract

We present ShadowDraw, a system for guiding the freeform drawing of objects. As the user draws, ShadowDraw dynamically updates a shadow image underlying the user's strokes. The shadows are suggestive of object contours that guide the user as they continue drawing. This paradigm is similar to tracing, with two major differences. First, we do not provide a single image from which the user can trace; rather, ShadowDraw automatically blends relevant images from a large database to construct the shadows. Second, the system dynamically adapts to the user's drawing in real time and produces suggestions accordingly. ShadowDraw works by efficiently matching local edge patches between the query, constructed from the current drawing, and a database of images. A hashing technique enforces both local and global similarity and provides sufficient speed for interactive feedback. Shadows are created by aggregating the top retrieved edge maps, spatially weighted by their match scores. We test our approach with human subjects and show comparisons between drawings produced with and without the system. The results show that our system produces more realistically proportioned line drawings.

Approach

ShadowDraw includes three main components: (1) the construction of an inverted file structure that indexes a database of images and their edge maps; (2) a query method that, given user strokes, dynamically retrieves matching images, aligns them to the evolving drawing, and weights them based on a matching score; and (3) the user interface, which displays a shadow of weighted edge maps beneath the user's drawing to help guide the drawing process.

Database Creation

Examples of database images and corresponding edge maps

We use a set of approximately 30,000 natural images collected from the internet via approximately 40 categorical queries such as “t-shirt”, “bicycle”, and “car”. Although such images contain many extraneous elements (backgrounds, other objects, framing lines, and so on), the expectation is that, on average, they will contain the edges a user may want to draw.

We process each image in three stages and add the results to an inverted file structure. First, we extract edges from the image using the long edge detector described in [Bhat et al., TOG 2009]. Next, we extract patches uniformly on a grid and compute Binary Coherent Edge (BiCE) descriptors for each patch [Zitnick, ECCV 2010]. Finally, for each patch, we compute concatenated sets of min-hashes, called sketches, and add them to the database. The database is stored as an inverted file: it is indexed by sketch value, and each entry points back to the original image and the patch location.
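The indexing step can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the BiCE descriptor is represented simply as the set of indices of its active bits, and the number of sketches per patch (`NUM_SKETCHES`), the min-hashes per sketch (`HASHES_PER_SKETCH`), the descriptor length (`DESCRIPTOR_BITS`), and the `InvertedFile` class are all hypothetical. Each min-hash is the smallest permuted position of any active bit under a random permutation; concatenating several min-hashes into one sketch means two patches share that sketch only if they agree on every min-hash in it.

```python
import random
from collections import defaultdict

# Hypothetical parameters; the page does not state these values.
NUM_SKETCHES = 4        # sketches stored per patch
HASHES_PER_SKETCH = 2   # min-hashes concatenated into one sketch
DESCRIPTOR_BITS = 512   # length of the binary patch descriptor (assumed)


def make_permutations(count, size, seed=0):
    """Random permutations of bit positions; each one defines a min-hash."""
    rng = random.Random(seed)
    perms = []
    for _ in range(count):
        perm = list(range(size))
        rng.shuffle(perm)
        perms.append(perm)
    return perms


PERMS = make_permutations(NUM_SKETCHES * HASHES_PER_SKETCH, DESCRIPTOR_BITS)


def min_hash(active_bits, perm):
    """Smallest permuted position among the descriptor's active bits."""
    return min(perm[b] for b in active_bits)


def sketches(active_bits):
    """Group min-hashes into sketches, keyed by sketch index k."""
    hashes = [min_hash(active_bits, p) for p in PERMS]
    return [
        (k, tuple(hashes[k * HASHES_PER_SKETCH:(k + 1) * HASHES_PER_SKETCH]))
        for k in range(NUM_SKETCHES)
    ]


class InvertedFile:
    """Maps each sketch value to the (image_id, patch_location) pairs that produced it."""

    def __init__(self):
        self.index = defaultdict(list)

    def add_patch(self, image_id, location, active_bits):
        if not active_bits:
            return  # patches without edges produce no sketches
        for key in sketches(active_bits):
            self.index[key].append((image_id, location))

    def lookup(self, active_bits):
        """Return every database patch that shares a sketch with the query patch."""
        if not active_bits:
            return []
        hits = []
        for key in sketches(active_bits):
            hits.extend(self.index.get(key, []))
        return hits
```

Keying each entry by the sketch index `k` keeps the sketches independent, in the manner of separate hash tables. Identical descriptors always collide on every sketch, while descriptors with no active bits in common can never collide, because a permutation maps distinct bits to distinct positions.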

Image Matching and Retrieval

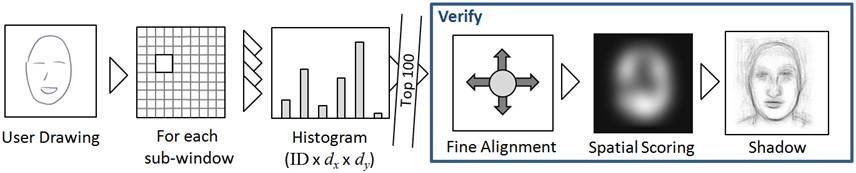

The figure above shows the real-time matching pipeline between the database images and the user's drawing. Our hashing scheme allows efficient (real-time) image queries, since we only need to consider images with matching sketches. First, we use the inverted file structure to obtain a set of the top 100 candidate matches. Next, we align and score each candidate match against the user's drawing. This two-step matching procedure is necessary for computational efficiency, since only a small subset of the database images needs to be finely aligned and weighted. We use the scores from the alignment step to compute a set of spatially varying weights for each edge image. The output is a shadow image resulting from the weighted average of the edge images. Finally, we display the shadow image to the user beneath his or her drawing.
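A minimal sketch of the two ends of this pipeline, candidate retrieval and shadow synthesis, is shown below. It assumes (the page does not say) that candidates are ranked by counting how many query sketches hit each database image, and that the alignment step has already produced, for every candidate, an edge map and a per-pixel weight map the same size as the drawing. `top_candidates` and `shadow_image` are hypothetical names, and images are plain lists of rows so the example needs only the standard library.

```python
from collections import Counter


def top_candidates(patch_hits, k=100):
    """Rank database images by the number of sketch matches they received.

    patch_hits: iterable of (image_id, patch_location) pairs gathered from
    the inverted-file lookups of every query patch.
    """
    votes = Counter(image_id for image_id, _ in patch_hits)
    return [image_id for image_id, _ in votes.most_common(k)]


def shadow_image(edge_maps, weight_maps, eps=1e-8):
    """Per-pixel weighted average of aligned candidate edge maps.

    edge_maps:   N edge maps (lists of rows, values in [0, 1])
    weight_maps: N non-negative weight maps of the same size, one per
                 candidate (how they are derived from scores is assumed)
    """
    height, width = len(edge_maps[0]), len(edge_maps[0][0])
    shadow = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            num = sum(w[y][x] * e[y][x] for e, w in zip(edge_maps, weight_maps))
            den = sum(w[y][x] for w in weight_maps)
            shadow[y][x] = num / (den + eps)  # eps avoids division by zero
    return shadow
```

Because the weights vary per pixel, different candidates can dominate different regions of the shadow, which is what lets the result combine parts of several database images.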

We take advantage of the fact that the user's strokes change gradually over time to increase performance. At each time step, only the votes from sketches of patches that have changed are updated. The candidate set contains images with approximate matching locations, which arise from the discretization of offsets in the grid of patches. We refine these offsets using a 1D variation of the Generalized Hough transform. Spatially varying weights produce shadows that are a composite of multiple distinct edge images, creating the appearance of an object that does not exist in any single database image.
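The incremental update can be illustrated with a small vote counter. This is a sketch under assumptions: `IncrementalVoter` is a hypothetical class, `lookup` stands in for an inverted-file query that returns `(image_id, patch_location)` hits for one patch descriptor, and votes are accumulated per image only. The Hough-based offset refinement is not shown.

```python
from collections import Counter


class IncrementalVoter:
    """Keeps per-image vote counts current as individual query patches change.

    lookup: callable mapping a patch descriptor to a list of
    (image_id, patch_location) hits, e.g. an inverted-file query (assumed).
    """

    def __init__(self, lookup):
        self.lookup = lookup
        self.patch_hits = {}     # query patch location -> hits it contributed
        self.votes = Counter()   # image_id -> current vote count

    def update_patch(self, location, descriptor):
        # Withdraw the votes this patch cast at the previous time step.
        for image_id, _ in self.patch_hits.pop(location, []):
            self.votes[image_id] -= 1
        # Cast fresh votes for the patch's new content.
        hits = self.lookup(descriptor)
        for image_id, _ in hits:
            self.votes[image_id] += 1
        self.patch_hits[location] = hits

    def top(self, k=100):
        """Images with a positive vote count, most votes first."""
        return [i for i, c in self.votes.most_common(k) if c > 0]
```

Calling `update_patch` only for grid cells whose strokes changed since the previous time step keeps the number of lookups per frame proportional to the edited region rather than to the whole drawing.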

User Interface

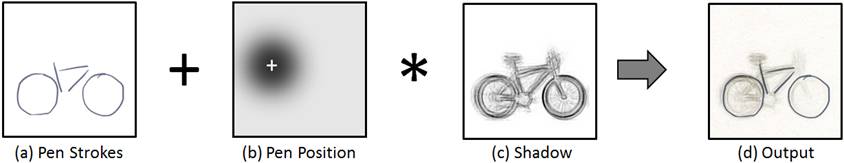

The user can draw or erase strokes using a stylus. The user sees their own drawing, formed with pen strokes, superimposed on a continuously updated shadow image. To make the shadow visible to the user without distracting from the user's actual drawing, we filter the shadow image to remove noisy and faint edges. We also weight the shadow image to have higher contrast near the user's cursor position.
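One way to realize this display step is sketched below. The page does not specify the filter or the contrast weighting, so both are assumptions: faint edges are removed with a fixed `threshold`, and the surviving values are scaled by a Gaussian falloff of width `sigma` pixels around the cursor, with a `floor` so that distant shadows stay faintly visible. `display_shadow` and its parameter values are hypothetical.

```python
import math


def display_shadow(shadow, cursor, threshold=0.1, sigma=50.0, floor=0.3):
    """Prepare a shadow image (rows of floats in [0, 1]) for display.

    Values below `threshold` are treated as faint or noisy edges and set to 0.
    The rest are scaled by a factor between `floor` (far from the cursor)
    and 1 (at the cursor), following a Gaussian falloff of width `sigma`.
    """
    cx, cy = cursor
    out = []
    for y, row in enumerate(shadow):
        new_row = []
        for x, value in enumerate(row):
            if value < threshold:
                new_row.append(0.0)  # drop faint edges
                continue
            d2 = (x - cx) ** 2 + (y - cy) ** 2
            falloff = math.exp(-d2 / (2.0 * sigma ** 2))
            new_row.append(value * (floor + (1.0 - floor) * falloff))
        out.append(new_row)
    return out
```

Keeping a nonzero `floor` is a design choice: the shadow near the pen is emphasized, but the overall object layout remains visible as context.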

Results

All our experiments are run using a database of approximately 30,000 images. Using this large database, the user can receive guidance for a variety of object categories, including specific types of objects such as office chairs, folding chairs, or rocking chairs.

On average, a new shadow image is computed every 0.4 to 0.9 seconds, depending on the number of new strokes. A fast response is critical for creating a positive feedback loop in which the user obtains suggestions while still in the process of drawing a stroke. As the user draws new strokes and erases others, the shadows dynamically update to best match the user's drawing. The last row of the figure above demonstrates the scoring function's robustness to clutter: even when many spurious strokes are drawn, the correct images are given high weight in the shadow image.

Please refer to our video to see the sessions in action and for more results.

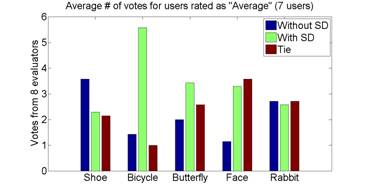

User Studies

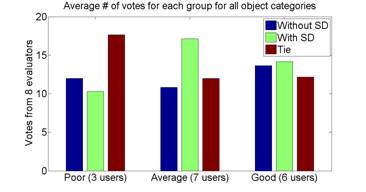

We conducted a user study to assess the effectiveness of ShadowDraw for untrained drawers. In the first stage, subjects produced quick 1-3 minute drawings with and without ShadowDraw. In the second stage, a separate set of subjects evaluated the drawings.

Overall, drawings made with ShadowDraw scored significantly higher for the “average” group. Users in this group are able to draw the basic shapes and rough proportions of objects correctly, but have difficulty applying the exact proportions and details essential for producing compelling drawings, which is precisely where ShadowDraw can help.

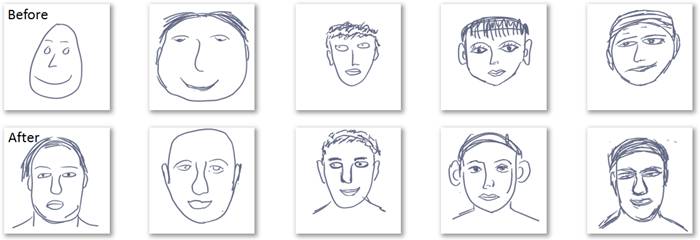

The figure above shows some examples of users' drawings of faces before and after practice with ShadowDraw. There is a noticeable change toward realistic proportions in the drawings of subjects with both poor skill (left) and good skill (right). Notice how each subject's personal style is maintained between drawings, and that the more proficient drawers are not simply tracing the shadows.

Publication

ShadowDraw: Real-Time User Guidance for Freehand Drawing

[pdf] [slides] [video] [youtube] [data]

Yong Jae Lee, Larry Zitnick, and Michael Cohen

ACM Transactions on Graphics (Proceedings of SIGGRAPH), Vancouver, Canada, August 2011.