Sagnik Majumder¹, Changan Chen¹,²\*, Ziad Al-Halah¹\*, Kristen Grauman¹,²


¹UT Austin, ²FAIR, \*Equal contribution. Accepted to NeurIPS 2022.


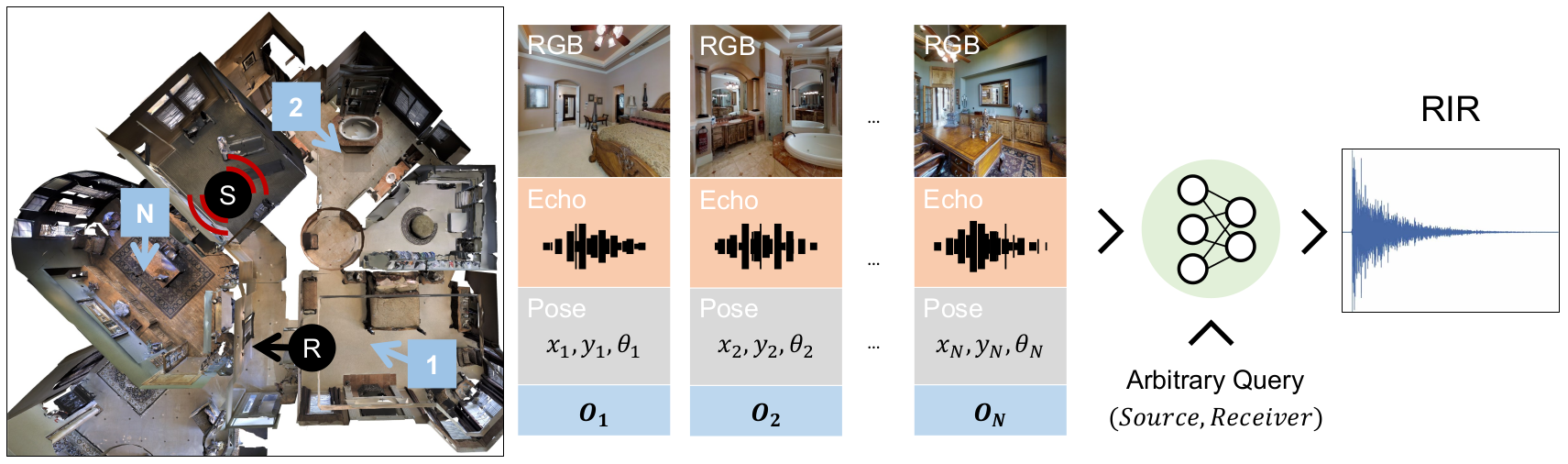

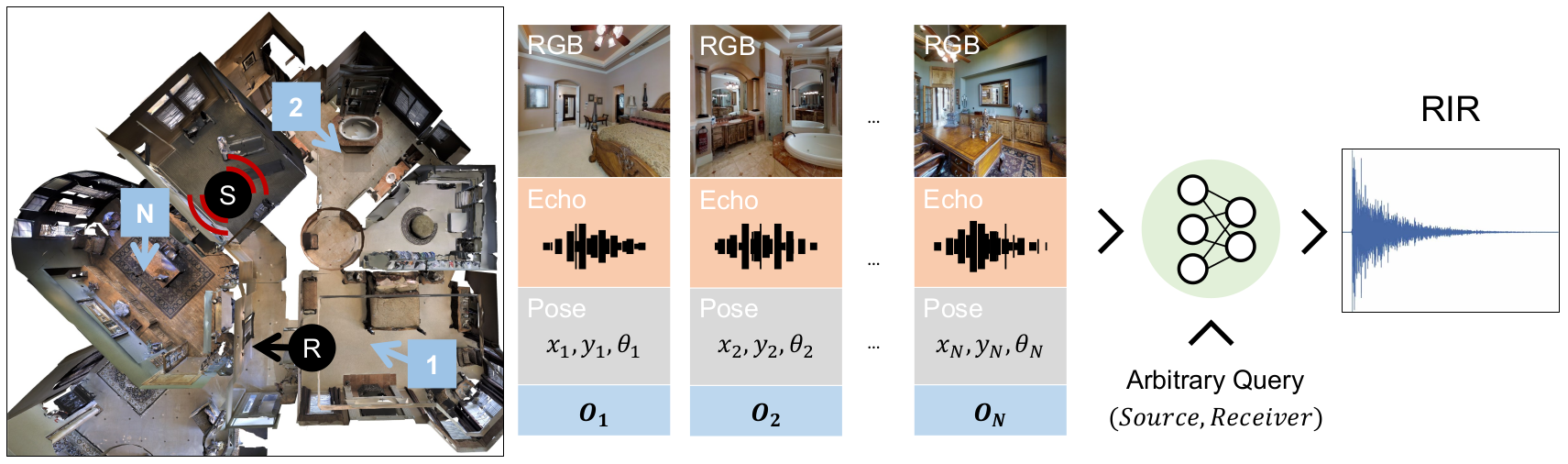

Room impulse response (RIR) functions capture how the surrounding physical environment transforms the sounds heard by a listener, with implications for various applications in AR, VR, and robotics. Whereas traditional methods to estimate RIRs assume dense geometry and/or sound measurements throughout the environment, we explore how to infer RIRs based on a sparse set of images and echoes observed in the space. Towards that goal, we introduce a transformer-based method that uses self-attention to build a rich acoustic context, then predicts RIRs for arbitrary query source-receiver locations through cross-attention. Additionally, we design a novel training objective that improves the match in the acoustic signature between the RIR predictions and the targets. In experiments using a state-of-the-art audio-visual simulator for 3D environments, we demonstrate that our method successfully generates arbitrary RIRs, outperforming state-of-the-art methods and, in a major departure from traditional methods, generalizing to novel environments in a few-shot manner.
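The abstract describes the model only at a high level. As a rough illustration of the encode-then-query pattern it names, here is a minimal NumPy sketch under our own assumptions: a single attention layer and head, random untrained weights, generic per-observation feature vectors standing in for the fused image and echo embeddings, and a 6-D query pose (source and receiver coordinates). The names `FewShotRIRSketch`, `energy_decay_curve_db`, and `decay_matching_loss` are hypothetical. The decay-curve loss is only one plausible way to compare the acoustic signatures of two RIRs (via Schroeder backward integration); it is not taken from the paper and may differ from the authors' objective.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention(q, k, v):
    # Scaled dot-product attention: q is (n_q, d), k and v are (n_kv, d).
    scores = q @ k.T / np.sqrt(q.shape[-1])
    return softmax(scores, axis=-1) @ v


class FewShotRIRSketch:
    """Hypothetical one-layer, one-head model: self-attention over the sparse
    observations, then cross-attention from query poses to that context."""

    def __init__(self, d_ctx, d_query, d_model, rir_len, seed=0):
        rng = np.random.default_rng(seed)

        def init(rows, cols):
            # Random, untrained projection; a real model would learn these.
            return rng.normal(0.0, 1.0 / np.sqrt(rows), (rows, cols))

        self.w_ctx, self.w_pose = init(d_ctx, d_model), init(d_query, d_model)
        self.self_qkv = [init(d_model, d_model) for _ in range(3)]
        self.cross_qkv = [init(d_model, d_model) for _ in range(3)]
        self.w_out = init(d_model, rir_len)

    def encode(self, context):
        # context: (n_obs, d_ctx), e.g. fused image and echo features per observation.
        h = context @ self.w_ctx
        wq, wk, wv = self.self_qkv
        # Self-attention: every observation attends to all others (plus residual).
        return h + attention(h @ wq, h @ wk, h @ wv)

    def predict(self, memory, query_poses):
        # query_poses: (n_queries, d_query), e.g. source xyz and receiver xyz.
        q = query_poses @ self.w_pose
        wq, wk, wv = self.cross_qkv
        # Cross-attention: each query reads from the encoded acoustic context.
        z = q + attention(q @ wq, memory @ wk, memory @ wv)
        return z @ self.w_out  # (n_queries, rir_len) predicted waveform samples


def energy_decay_curve_db(rir, eps=1e-12):
    # Schroeder backward integration: energy remaining from each sample onward,
    # normalized so the curve starts at 0 dB.
    energy = np.cumsum(rir[..., ::-1] ** 2, axis=-1)[..., ::-1]
    return 10.0 * np.log10(energy / (energy[..., :1] + eps) + eps)


def decay_matching_loss(pred, target):
    # Mean absolute gap (in dB) between predicted and target decay curves.
    return np.mean(np.abs(energy_decay_curve_db(pred) - energy_decay_curve_db(target)))


rng = np.random.default_rng(1)
model = FewShotRIRSketch(d_ctx=32, d_query=6, d_model=16, rir_len=256)
memory = model.encode(rng.normal(size=(8, 32)))         # 8 sparse observations
pred = model.predict(memory, rng.normal(size=(3, 6)))  # 3 query source-receiver pairs
target = rng.normal(size=(3, 256)) * np.exp(-np.arange(256) / 40.0)  # toy decaying RIRs
loss = decay_matching_loss(pred, target)
print(pred.shape, loss)
```

Keeping the context encoder separate from the query decoder means the sparse observations are encoded once and the result is reused for any number of query poses, which suits the few-shot setting described above.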


Task description, prediction examples and downstream applications.


@inproceedings{

majumder2022fewshot,

title={Few-Shot Audio-Visual Learning of Environment Acoustics},

author={Sagnik Majumder and Changan Chen and Ziad Al-Halah and Kristen Grauman},

booktitle={Advances in Neural Information Processing Systems},

editor={Alice H. Oh and Alekh Agarwal and Danielle Belgrave and Kyunghyun Cho},

year={2022},

url={https://openreview.net/forum?id=PIXGY1WgU-S}

}


Thanks to Tushar Nagarajan and Kumar Ashutosh for feedback on paper drafts. UT Austin is supported in part by the IFML NSF AI Institute, NSF CCRI, and DARPA L2M. K.G. is paid as a research scientist by Meta, and C.C. was a visiting student researcher at FAIR when this work was done.

Copyright © 2022 University of Texas at Austin