| Arjun Somayazulu¹, Changan Chen¹, Kristen Grauman¹,² |

| ¹UT Austin, ²FAIR, Meta. Accepted at NeurIPS 2023 |


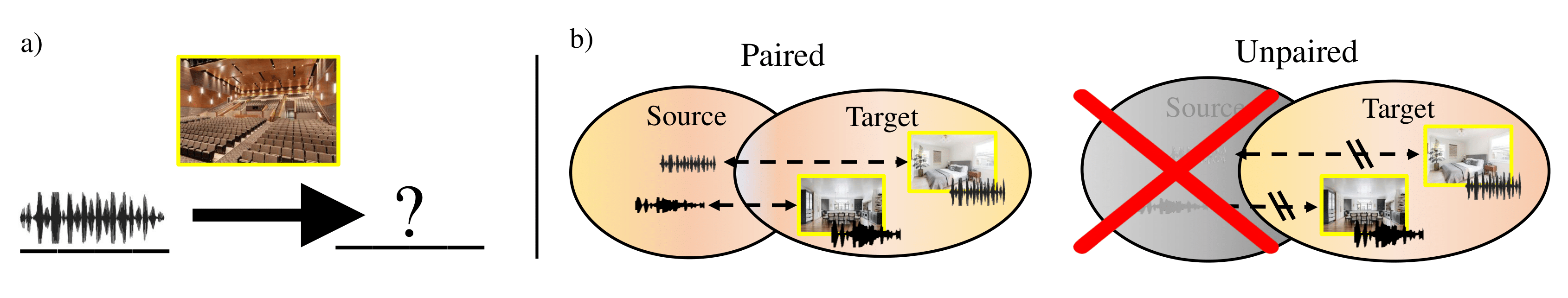

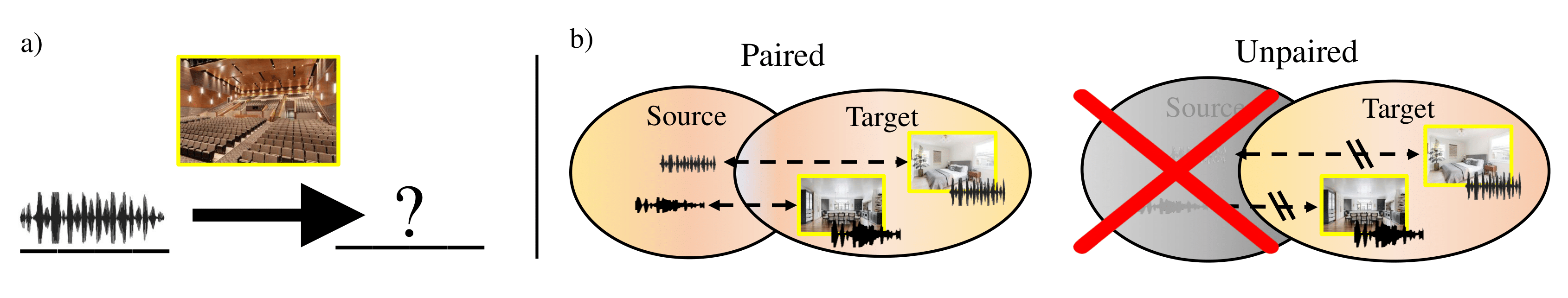

| Acoustic matching aims to re-synthesize an audio clip to sound as if it were recorded in a target acoustic environment. Existing methods assume access to paired training data, where the audio is observed in both source and target environments, but this limits the diversity of training data or requires the use of simulated data or heuristics to create paired samples. We propose a self-supervised approach to visual acoustic matching where training samples include only the target scene image and audio, without acoustically mismatched source audio for reference. Our approach jointly learns to disentangle room acoustics and re-synthesize audio into the target environment, via a conditional GAN framework and a novel metric that quantifies the level of residual acoustic information in the de-biased audio. Training with either in-the-wild web data or simulated data, we demonstrate that it outperforms the state-of-the-art on multiple challenging datasets and a wide variety of real-world audio and environments. |
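To make the task definition concrete, the sketch below shows the classical signal-processing view of "sounding as if recorded in a target environment": a dry signal convolved with a room impulse response (RIR). This is not the method proposed here, which learns the transformation from a scene image and never observes an RIR. The helper names `synthetic_rir` and `convolve`, and the exponentially decaying noise model of an RIR, are assumptions made purely for illustration.

```python
import math
import random


def synthetic_rir(length, decay, seed=0):
    """Toy RIR (illustrative assumption): direct path plus decaying noise tail."""
    rng = random.Random(seed)
    rir = [rng.uniform(-1.0, 1.0) * math.exp(-decay * n) for n in range(length)]
    rir[0] = 1.0  # direct sound arrives first at full amplitude
    return rir


def convolve(signal, kernel):
    """Full linear convolution; output has len(signal) + len(kernel) - 1 samples."""
    out = [0.0] * (len(signal) + len(kernel) - 1)
    for i, s in enumerate(signal):
        for j, k in enumerate(kernel):
            out[i + j] += s * k
    return out


# A short "dry" clip with no room acoustics.
dry = [1.0, 0.5, -0.25, 0.0]

# Two hypothetical target environments: fast decay vs. slow decay.
small_room = synthetic_rir(8, decay=1.5)
large_hall = synthetic_rir(32, decay=0.1)

# The same source content rendered in each environment.
wet_small = convolve(dry, small_room)
wet_hall = convolve(dry, large_hall)
```

Here the same `dry` content yields a longer reverberant tail in `large_hall` than in `small_room`. In the self-supervised setting described above, neither the dry source nor the RIR is available at training time, so a learned model has to stand in for this explicit convolution.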


Visual Acoustic Matching examples on synthetic data and Web videos, as well as a challenging cross-domain evaluation, compared against the prior state-of-the-art.


| (1) Changan Chen, Ruohan Gao, Paul Calamia, Kristen Grauman. Visual Acoustic Matching. In CVPR 2022 [Bibtex] |

| (2) Nikhil Singh, Jeff Mentch, Jerry Ng, Matthew Beveridge, Iddo Drori. Cross-Modal Reverb Impulse Response Synthesis. In ICCV 2021 [Bibtex] |

| (3) Changan Chen, Alexander Richard, Roman Shapovalov, Vamsi Krishna Ithapu, Natalia Neverova, Kristen Grauman, Andrea Vedaldi. Novel-View Acoustic Synthesis. In CVPR 2023 [Bibtex] |

| (4) Changan Chen*, Unnat Jain*, Carl Schissler, Sebastia Vicenc Amengual Gari, Ziad Al-Halah, Vamsi Krishna Ithapu, Philip Robinson, Kristen Grauman. SoundSpaces: Audio-Visual Navigation in 3D Environments. In ECCV 2020 [Bibtex] |

| (5) Ariel Ephrat, Inbar Mosseri, Oran Lang, Tali Dekel, Kevin Wilson, Avinatan Hassidim, William T. Freeman, Michael Rubinstein. Looking to Listen at the Cocktail Party: A Speaker-Independent Audio-Visual Model for Speech Separation. In SIGGRAPH 2018 [Bibtex] |

| Thanks to Ami Baid for help in data collection. UT Austin is supported in part by the IFML NSF AI Institute. K.G. is paid as a research scientist at Meta. |

| Copyright © 2023 University of Texas at Austin |