Kumar Ashutosh¹,², Santhosh Kumar Ramakrishnan¹, Triantafyllos Afouras², Kristen Grauman¹,²

¹UT Austin, ²FAIR, Meta

NeurIPS 2023


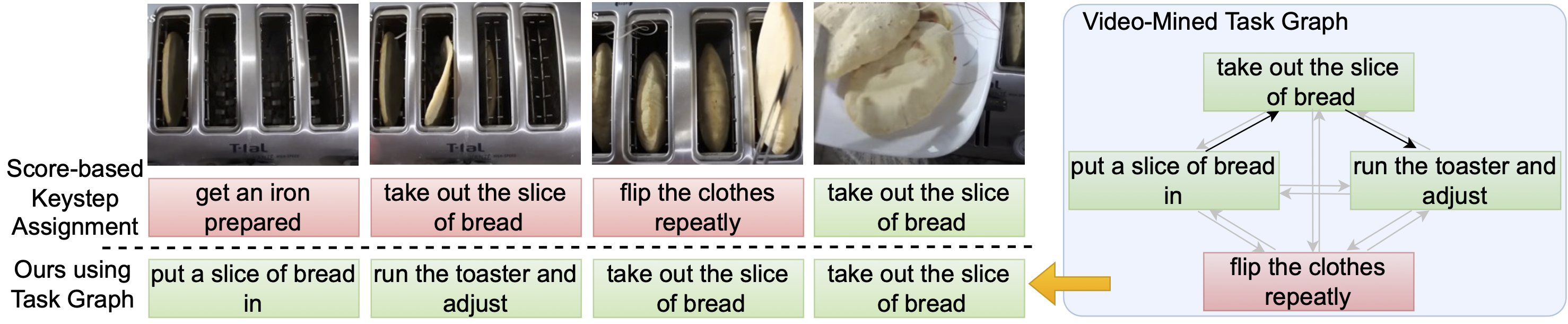

Procedural activity understanding requires perceiving human actions in terms of a broader task, where multiple keysteps are performed in sequence across a long video to reach a final goal state, as in the steps of a recipe or a DIY fix-it task. Prior work largely treats keystep recognition in isolation from this broader structure, or else rigidly confines keysteps to align with a predefined sequential script. We propose discovering a task graph automatically from how-to videos to represent probabilistically how people tend to execute keysteps, and then leveraging this graph to regularize keystep recognition in novel videos. On multiple datasets of real-world instructional videos, we show two benefits, both exceeding the state of the art: more reliable zero-shot keystep localization and improved video representation learning.
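The abstract does not spell out how the graph is built or how it regularizes recognition. The sketch below illustrates the general idea under loudly stated assumptions: the task graph is modeled as a first-order Markov transition matrix over keysteps estimated from observed keystep sequences (with Laplace smoothing), and "regularizing" means Viterbi decoding that combines per-segment keystep log-likelihoods with log transition probabilities. The names `build_task_graph`, `decode_with_graph`, `smoothing`, and `weight` are hypothetical, and this is not necessarily the paper's formulation.

```python
import numpy as np


def build_task_graph(sequences, num_keysteps, smoothing=1.0):
    """Hypothetical task graph: P[i, j] estimates Pr(next keystep = j | current = i).

    Counts consecutive keystep pairs in each sequence, adds Laplace smoothing
    so unseen transitions keep nonzero probability, then normalizes each row.
    """
    counts = np.full((num_keysteps, num_keysteps), smoothing, dtype=float)
    for seq in sequences:
        for a, b in zip(seq[:-1], seq[1:]):
            counts[a, b] += 1.0
    return counts / counts.sum(axis=1, keepdims=True)


def decode_with_graph(scores, transitions, weight=1.0):
    """Viterbi decoding over keysteps.

    scores: (T, K) per-segment keystep log-likelihoods from some recognizer.
    transitions: (K, K) row-stochastic matrix from build_task_graph.
    weight: scales the graph's log-probabilities relative to the scores.
    Returns the highest-scoring keystep sequence as a list of length T.
    """
    T, K = scores.shape
    log_trans = weight * np.log(transitions)
    dp = scores[0].copy()                  # best path score ending in each keystep
    back = np.zeros((T, K), dtype=int)     # backpointers to the previous keystep
    for t in range(1, T):
        cand = dp[:, None] + log_trans     # cand[i, j]: come from i, move to j
        back[t] = cand.argmax(axis=0)
        dp = cand.max(axis=0) + scores[t]
    path = [int(dp.argmax())]
    for t in range(T - 1, 0, -1):          # follow backpointers to recover the path
        path.append(int(back[t, path[-1]]))
    return path[::-1]


# Toy example: training videos always go keystep 0 -> 1 -> 2.
sequences = [[0, 1, 2], [0, 1, 2], [0, 1, 2]]
P = build_task_graph(sequences, num_keysteps=3)

# A new video whose middle segment is ambiguous between keysteps 1 and 2.
probs = np.array([[0.8, 0.1, 0.1],
                  [0.1, 0.4, 0.5],
                  [0.1, 0.1, 0.8]])
scores = np.log(probs)
print(scores.argmax(axis=1).tolist())  # [0, 2, 2]  (independent per-segment choice)
print(decode_with_graph(scores, P))    # [0, 1, 2]  (graph-regularized choice)
```

In this toy case the per-segment argmax labels the ambiguous middle segment as keystep 2, while the transition prior learned from the training sequences shifts it to keystep 1, which is the kind of structural correction a task graph is meant to provide.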


@inproceedings{ashutosh2024videomined,
  author = {Ashutosh, Kumar and Ramakrishnan, Santhosh Kumar and Afouras, Triantafyllos and Grauman, Kristen},
  booktitle = {Advances in Neural Information Processing Systems},
  editor = {A. Oh and T. Neumann and A. Globerson and K. Saenko and M. Hardt and S. Levine},
  pages = {67833--67846},
  publisher = {Curran Associates, Inc.},
  title = {Video-Mined Task Graphs for Keystep Recognition in Instructional Videos},
  url = {https://proceedings.neurips.cc/paper_files/paper/2023/file/d62e65cfdba247e0cd7cac5964f9fbd9-Paper-Conference.pdf},
  volume = {36},
  year = {2023}
}


UT Austin is supported in part by the IFML NSF AI institute. KG is paid as a research scientist at Meta. We thank the authors of Distant Supervision and Paprika for releasing their codebases.

Copyright © 2023 University of Texas at Austin