Recorded videos: morning session, panel discussion (we forgot the afternoon session, sorry!).

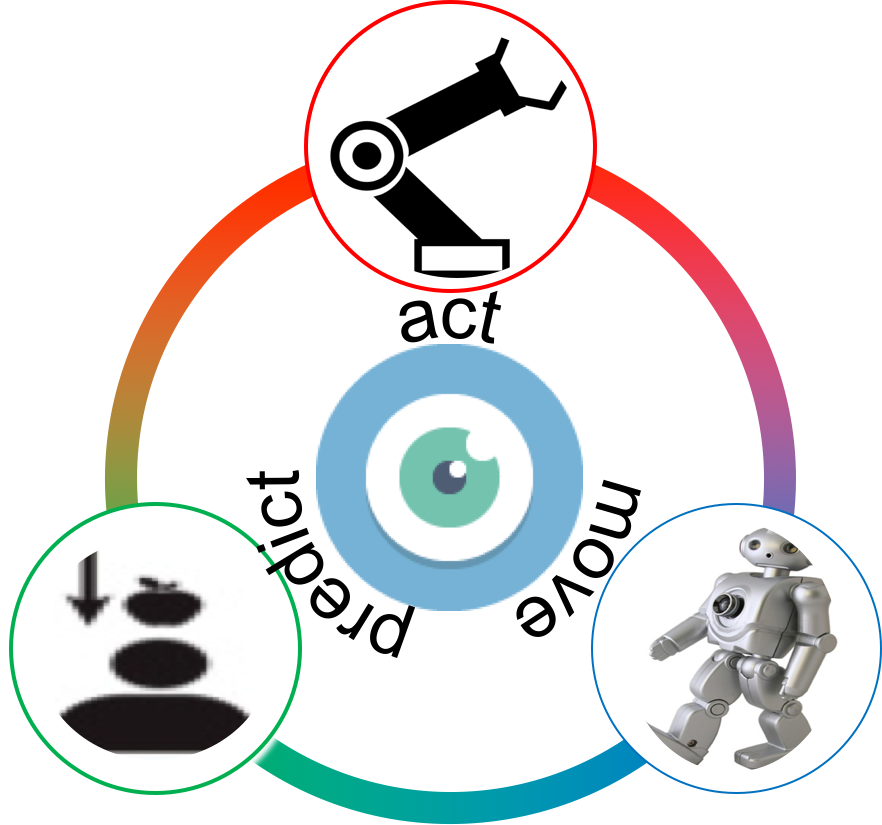

In the only context in which we have observed intelligence, namely the biological context, the emergence of intelligence and superior visual abilities in different families of animals has each time been closely tied to the emergence of the ability to move and act in their environments [Moravec'84]. Cognitive scientists have also empirically verified that self-generated motions are critical to the development of visual perceptual skills in animals [Held'63], and a sizeable research program in cognitive science studies "embodied cognition": the hypothesis that cognition is strongly influenced by aspects of an agent's body beyond the brain itself [Wilson'02].

Progress in standard visual recognition tasks in the last few years has been largely fueled by access to today's largest painstakingly curated and hand-labeled datasets. To create these datasets, images sampled independently from the web are manually assigned to one of several categories by thousands of human workers. This cumbersome process may be replaceable. Specifically, an agent continuously acting, moving and monitoring its environment has available to it many avenues of knowledge, going well beyond what can be learned from observing only orderless, i.i.d. "bags of images" with category labels like today's standard datasets. For instance, such an agent may exploit ordered image sequences, i.e., its observed video stream, with freely available image ↔ image or image ↔ other-sensor relationships. Such an agent can also act on its environment and use the observed results of its actions as a form of self-acquired supervision. For instance, it may tap an object to determine its material properties ("action"), walk around it to observe a less ambiguous view of it ("motion"), or learn natural world physics by dropping, pushing or throwing objects and learning to anticipate their behavior ("anticipation"). These forms of supervision may allow agents to discover knowledge not available through the standard supervised paradigm.

Moreover, alleviating the non-scalable curation and labeling requirements involved in compiling today's standard datasets is a worthwhile goal in itself, so that all or most visual learning may happen without manual supervision. Replacing manual supervision with alternative forms of supervision in this manner would have many advantages: (1) it would open up the possibility of exploiting much larger datasets for visual learning, which could drive even better-performing computer vision systems for conventional tasks, since the evidence over the last few years suggests that visual learning benefits from ever-higher capacity models trained on ever-larger datasets; (2) it would enable easy development of visual applications for narrower, non-standard domains for which large labeled datasets neither exist today nor are likely to be curated in the future, such as vision for an interplanetary rover; and (3) compared to standard supervised learning, it more closely resembles what we know about the avenues of learning available to the biological visual systems we hope to ultimately emulate in performance, even if not in design.

In this workshop, we aim to focus on how action, motion and anticipation may all offer viable and important means for visual learning. Several closely intertwined emerging research directions touching on our theme are being concurrently and largely independently explored by researchers in the vision, machine learning, and robotics communities around the world. A major goal of our workshop will be to bring these researchers together, provide a forum to foster collaborations and exchange of ideas, and ultimately, to help advance research along these directions.

Call for Abstracts

We invited 2-page abstracts describing relevant work that has been recently published, is in progress, or is to be presented at ECCV. Review of the submissions was double blind. While there will be no formal proceedings, accepted abstracts are posted here. Authors of accepted abstracts will present their work in a poster session at the workshop, and as short spotlight talks. We encouraged submissions not only from the vision community, but also from machine learning, robotics, cognitive science and other related disciplines. A representative, but not exhaustive, list of topics of interest is shown below:

- Learning from observer motions accompanying video

- Models of dynamics of object trajectories/pose changes in video

- Unsupervised representation learning from video

- Predictive/anticipatory models of future video frames given past frames

- Learning from other sensor streams accompanying video

- Generative modeling of unobserved object/scene views conditional on observed views

- Reinforcement learning approaches for robotic control from pixels

- Temporal coherence modeling for learning from video sequences

- Sensorimotor learning with visual sensors

- Embodied cognition and learning applied to machines

- Inferring/modeling physics and physical properties in video

- Active vision

The LaTeX template for submission is posted here. Abstracts must be no longer than 2 pages (including references), and submitted on or before August 3, 11.59 p.m. US Central Time. Submission will be via the workshop CMT. (Submission is now closed.)

Important Dates

| Date | Event |
| --- | --- |
| August 3 | Abstracts due (closed) |
| August 19 | Reviews due |
| August 29 | Decision notifications to authors |
| September 12 | Final abstracts due |
| October 9 (tentatively 9 a.m. to 5 p.m.) | Workshop date |

Speakers

We have invited researchers from across different disciplines to bring their perspectives to the workshop. Here is our speaker list:

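| Speaker | Affiliation |
| --- | --- |
| Abhinav Gupta | CMU |
| Jitendra Malik | UC Berkeley |
| Honglak Lee | U Michigan |
| Ali Farhadi | UW |
| Jeanette Bohg | MPI Tübingen |
| Nando de Freitas | Oxford |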

Program

The workshop will be held at Oudemanhuispoort. Here is our program.

| Time | Session |
| --- | --- |
| 09.00 a.m. | Opening remarks |
| 09.15 a.m. | Invited Speaker 1: Jitendra Malik: "Acquiring mental models through perception, simulation and action." |
| 09.45 a.m. | Invited Speaker 2: Nando de Freitas: "Make learning off-policy again! Sample efficient actor-critic with experience replay." |
| 10.15 a.m. | Invited Speaker 3: Jeanette Bohg: "Interactive Perception for Perceptive Manipulation - or, how putting perception on a physical system changes everything." |
| 10.45 a.m. | Coffee break |
| 11.00 a.m. | Poster spotlights |
| 11.45 a.m. | Lunch break |
| 01.00 p.m. | Poster session |
| 02.00 p.m. | Invited Speaker 4: Abhinav Gupta: "Scaling Self-supervision: From one task, one robot to multiple tasks and robots" |
| 02.30 p.m. | Invited Speaker 5: Honglak Lee: "Learning Disentangled Representations for Prediction and Anticipation" |
| 03.00 p.m. | Invited Speaker 6: Ali Farhadi: "Towards Crowifying Vision" |
| 03.30 p.m. | Coffee break |
| 03.45 p.m. | Panel discussion with invited speakers |

Accepted Papers

The following papers will be presented at the workshop as spotlights and posters:

- Mohamed Daoudi, Maxime Devanne, Francois Quesque, Yann Coello. Computational Modeling of Human Social Intention.

- Carolina Redondo-Cabrera, Roberto Lopez-Sastre. Unsupervised Feature Learning from Videos for Discovering and Recognizing Actions.

- Bert De Brabandere, Xu Jia, Tinne Tuytelaars, Luc Van Gool. Dynamic Filter Networks for Predicting Unobserved Views.

- Timo Luddecke, Florentin Worgotter. Scene Affordance: Inferring Actions from Household Scenes.

- Senthil Purushwalkam, Abhinav Gupta. Pose from Action: Unsupervised Learning of Pose Features based on Motion.

- Georgios Mastorakis, Xavier Hildenbrand, Kevin Grand, Dimitrios Makris. Customisable Fall Detection: A hybrid approach using physics based simulation and machine learning.

- Sven Bambach, David Crandall, Linda Smith, Chen Yu. Active Vision: Learning Visual Objects through Egocentric Views of Children and Parents.

- Tomas Petricek, Vojtech Salansky, Karel Zimmermann, Tomas Svoboda. Simultaneous Exploration and Segmentation with Incomplete Data.

- Ilker Yildirim, Jiajun Wu, Yilun Du, Joshua Tenenbaum. Interpreting Dynamic Scenes by a Physics Engine and Bottom-Up Visual Cues.

- Jacob Walker, Carl Doersch, Abhinav Gupta, Martial Hebert. An Uncertain Future: Forecasting from Static Images using Variational Autoencoders.

- Tianfan Xue, Jiajun Wu, Katherine Bouman, Bill Freeman. Visual Dynamics: Probabilistic Future Frame Synthesis via Cross Convolutional Networks.

- Rui Shu, James Brofos, Frank Zhang, Mohammad Ghavamzadeh, Hung Bui, Mykel Kochenderfer. Stochastic Video Prediction with Conditional Density Estimation.

- Atabak Dehban, Lorenzo Jamone, Adam Kampff, Jose Santos-Victor. A Moderately Large Size Dataset to Learn Visual Affordances of Objects and Tools Using iCub Humanoid Robot.

- Jeff Donahue, Philipp Krahenbuhl, Trevor Darrell. Adversarial Feature Learning.

- Roeland de Geest, Efstratios Gavves, Amir Ghodrati, Zhenyang Li, Cees Snoek, Tinne Tuytelaars. Online Action Detection.

Program committee

We thank our program committee for kindly donating their time and expertise towards reviewing workshop submissions.

Organizers

| Organizer | Affiliation |
| --- | --- |
| Dinesh Jayaraman | UT Austin |
| Kristen Grauman | UT Austin |
| Sergey Levine | UW |

Sponsors

Please email Dinesh (dineshj [at] cs [dot] utexas [dot] edu) for information about sponsorship.