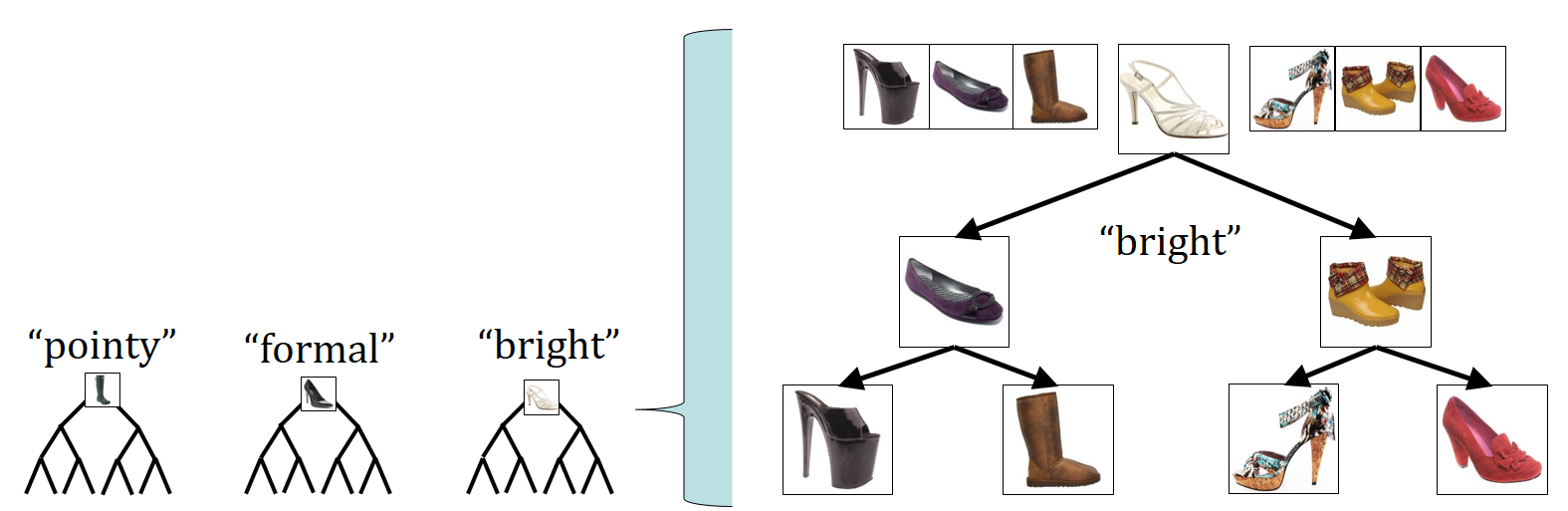

In interactive image search, a user iteratively refines his results by giving feedback on exemplar images. Active selection methods aim to elicit useful feedback, but traditional approaches suffer from expensive selection criteria and cannot predict informativeness reliably due to the imprecision of relevance feedback. To address these drawbacks, we propose to actively select "pivot" exemplars for which feedback in the form of a visual comparison will most reduce the system's uncertainty. For example, the system might ask, "Is your target image more or less crowded than this image?" Our approach relies on a series of binary search trees in relative attribute space, together with a selection function that predicts the information gain were the user to compare his envisioned target to the next node deeper in a given attribute's tree. It makes interactive search more efficient than existing strategies, in terms of both the system's selection time and the user's feedback effort.

Traditional relevance feedback methods focus on binary feedback:

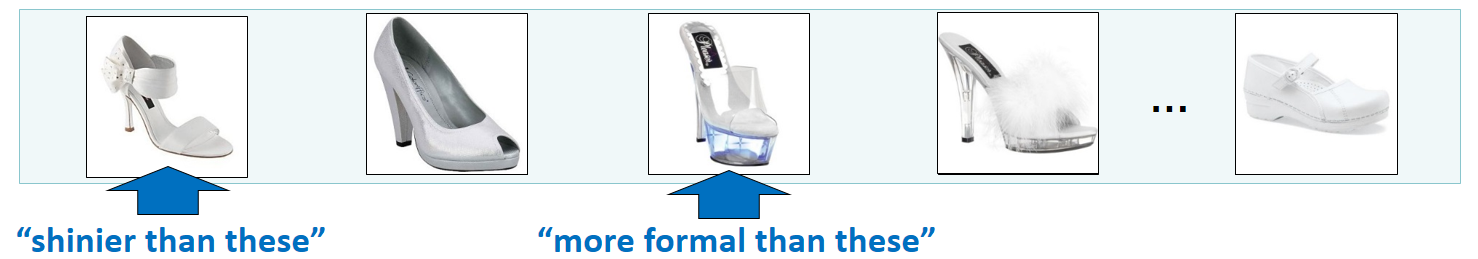

Attributes allow more precise semantic feedback:

But on which images would attribute feedback be most informative?

Relative attributes constitute learned ranking functions, one per attribute, which allow us to sort images from those that show the attribute least to those that show it most. See Parikh and Grauman, "Relative Attributes", ICCV 2011, for more information.
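To make the sorting step concrete, here is a minimal sketch. It assumes each attribute's ranking function is a linear score over an image feature vector. The names `attribute_score` and `rank_images`, and the toy feature values, are illustrative only and are not taken from the project code.

```python
from typing import Dict, List, Sequence


def attribute_score(weights: Sequence[float], features: Sequence[float]) -> float:
    """Predicted attribute strength, assuming a linear ranking function."""
    return sum(w * x for w, x in zip(weights, features))


def rank_images(weights: Sequence[float],
                images: Dict[str, Sequence[float]]) -> List[str]:
    """Return image ids sorted from least to most of the attribute."""
    return sorted(images, key=lambda name: attribute_score(weights, images[name]))


# Toy data: three images with 2-D features, and one hypothetical attribute
# whose ranking function only looks at the first feature dimension.
images = {"a": [0.9, 0.1], "b": [0.2, 0.5], "c": [0.5, 0.0]}
order = rank_images([1.0, 0.0], images)
```

One such ordering per attribute is all the later steps need: every comparison question is asked relative to a position in one of these sorted lists.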

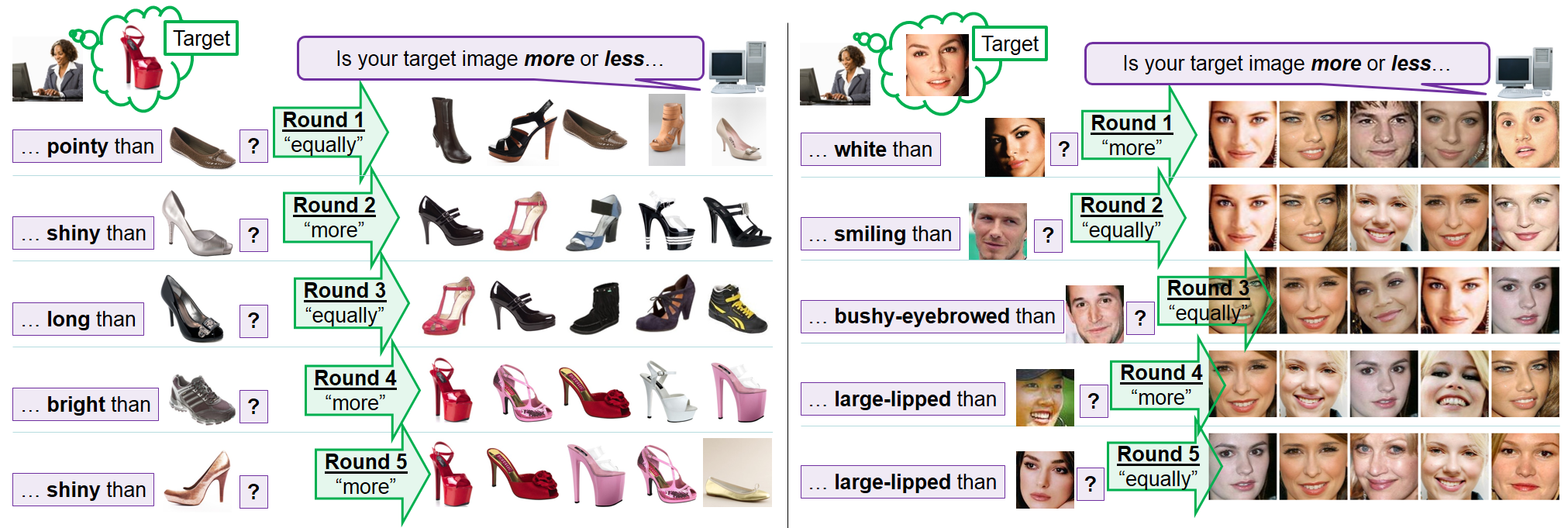

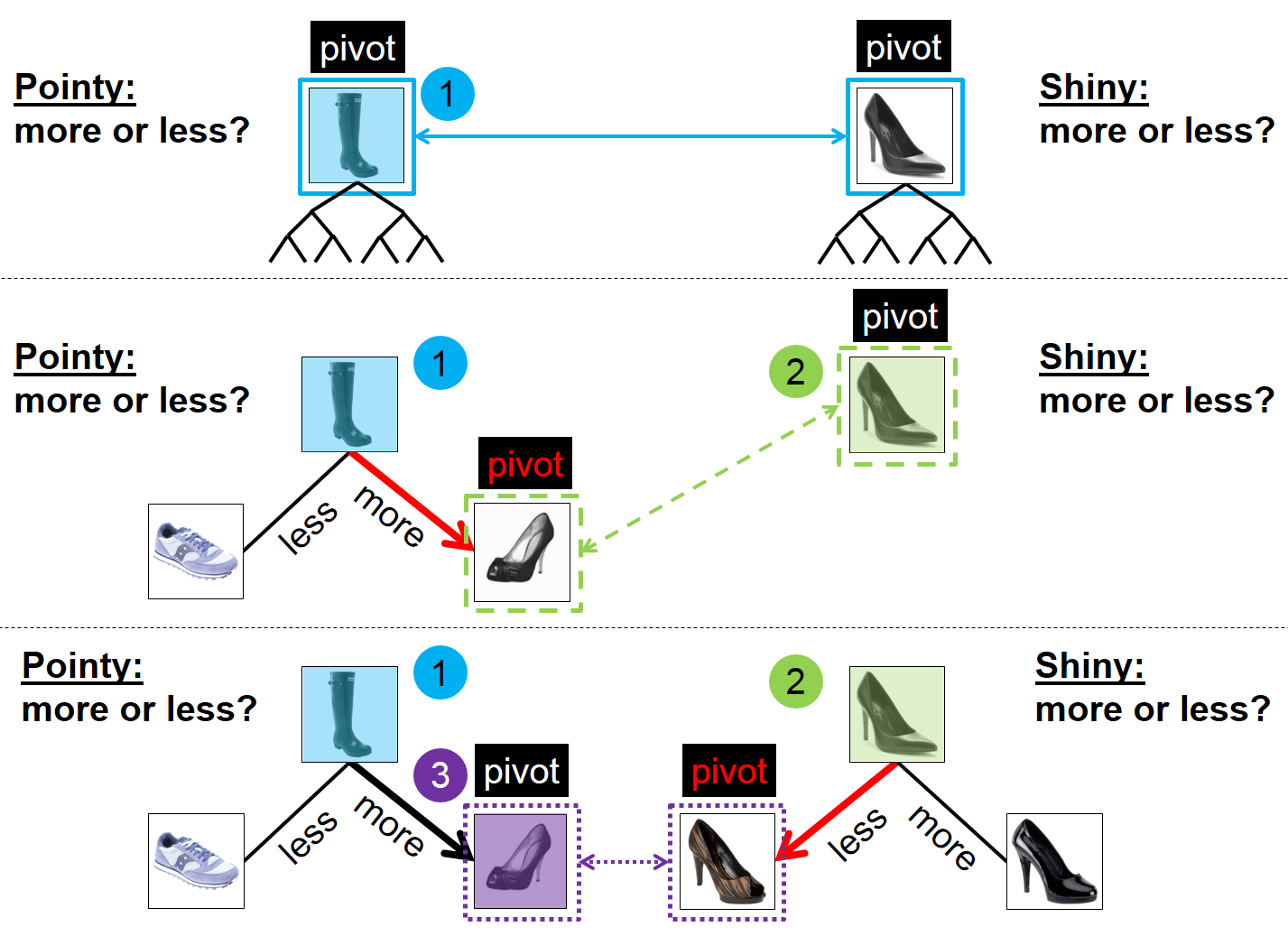

We find a series of useful comparisons by playing a relative, 20-questions-like game:

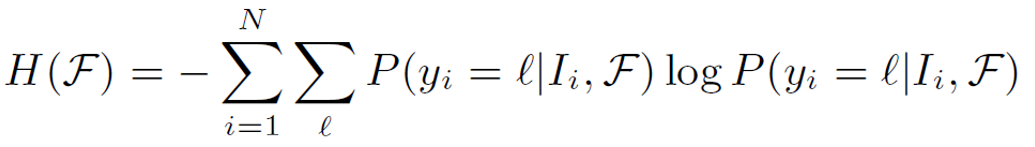

At each iteration, we compare a set of O(M) pivots (where M is the size of the attribute vocabulary), and pick the one which will lead to the highest expected information gain. We then request that the user compare his target image to the pivot with respect to the corresponding attribute. We update the pivot for that attribute to whichever child node the user response indicates ("less" means left, "more" means right, "equally" means stop exploring this attribute).
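The pivot update for a single attribute can be sketched as a binary search over the images sorted by that attribute. This sketch assumes a balanced tree whose nodes are the medians of the remaining range, which is one natural reading of the description and not necessarily the exact tree construction used. The class name `AttributeTree` and its fields are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class AttributeTree:
    """Implicit binary search tree over images sorted by one attribute."""
    ranked: List[str]                       # image ids, least to most of the attribute
    lo: int = 0                             # lower bound of the remaining range
    hi: int = field(default=0, init=False)  # upper bound, set in __post_init__
    done: bool = False                      # True once the user answers "equally"

    def __post_init__(self) -> None:
        self.hi = len(self.ranked) - 1

    def pivot(self) -> Optional[str]:
        """Current pivot image, or None if this attribute is exhausted."""
        if self.done or self.lo > self.hi:
            return None
        return self.ranked[(self.lo + self.hi) // 2]

    def update(self, response: str) -> None:
        """Descend to the child indicated by the user's answer."""
        if self.pivot() is None:
            return
        mid = (self.lo + self.hi) // 2
        if response == "less":      # target has less of the attribute: go left
            self.hi = mid - 1
        elif response == "more":    # target has more of the attribute: go right
            self.lo = mid + 1
        elif response == "equally":  # stop exploring this attribute
            self.done = True
        else:
            raise ValueError(f"unknown response: {response!r}")


tree = AttributeTree(["a", "b", "c", "d", "e"])
first = tree.pivot()
tree.update("more")
second = tree.pivot()
```

With one such object per attribute, each iteration only has to consider the M current pivots, one per tree.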

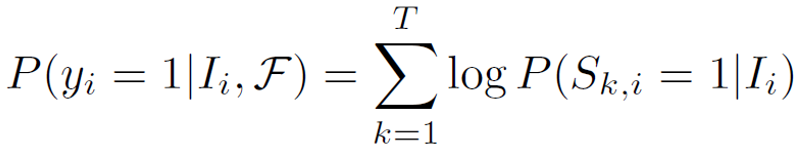

We estimate the relevance score for an image as:

where the probability that an image satisfies a given constraint is:

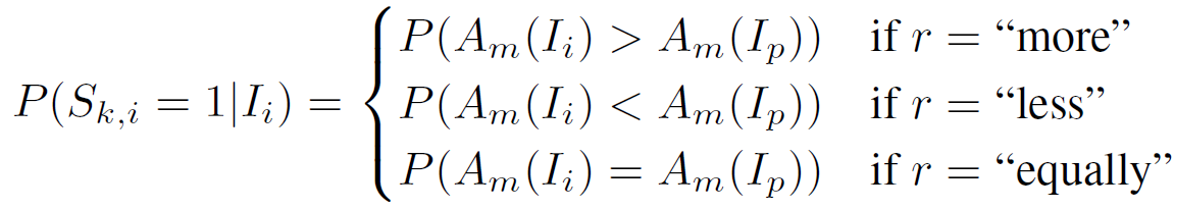

Then we measure the entropy of the current system (with respect to knowing which images are relevant and which are not) as:

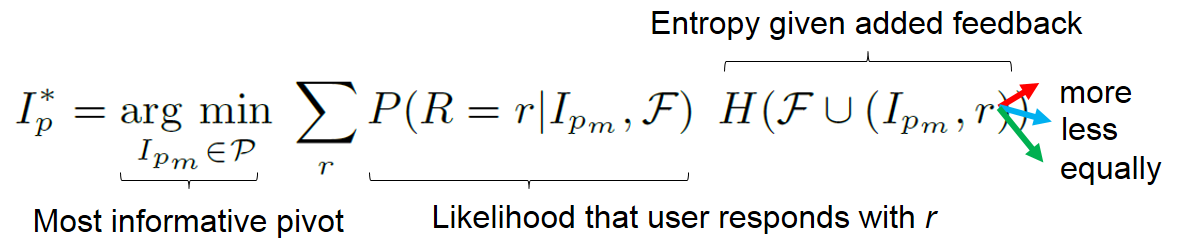

and we choose the next pivot comparison that minimizes the expected entropy:
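As a rough illustration of this selection step, the sketch below picks the pivot with the lowest expected entropy. Several parts are assumptions made for the example, not the formulas used on this page:

- Entropy is taken as the sum of binary entropies of the per-image relevance probabilities.
- The probability that an image satisfies a "more" constraint is a logistic function of the score difference, with a made-up `sharpness` parameter.
- A response multiplies each image's relevance by its constraint probability.
- The chance of each response is the relevance-weighted average of that probability.
- The "equally" response is omitted for brevity.

```python
import math
from typing import Dict, List


def binary_entropy(p: float) -> float:
    """Entropy in bits of a relevant / not-relevant variable."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


def system_entropy(relevance: List[float]) -> float:
    """Assumed form: sum of per-image binary entropies."""
    return sum(binary_entropy(p) for p in relevance)


def p_more(score: float, pivot_score: float, sharpness: float = 4.0) -> float:
    """Hypothetical soft constraint: P(image has more of the attribute than the pivot)."""
    return 1.0 / (1.0 + math.exp(-sharpness * (score - pivot_score)))


def expected_entropy(relevance: List[float], scores: List[float],
                     pivot_score: float) -> float:
    """Entropy after the comparison, averaged over the two possible answers."""
    more = [p_more(s, pivot_score) for s in scores]
    total = sum(relevance)
    # Assumed response model: relevance-weighted chance of hearing "more".
    w_more = sum(r * m for r, m in zip(relevance, more)) / total if total > 0 else 0.5
    after_more = [r * m for r, m in zip(relevance, more)]
    after_less = [r * (1.0 - m) for r, m in zip(relevance, more)]
    return w_more * system_entropy(after_more) + (1.0 - w_more) * system_entropy(after_less)


def select_pivot(relevance: List[float], attribute_scores: Dict[str, List[float]],
                 pivots: Dict[str, float]) -> str:
    """Pick the attribute whose current pivot minimizes expected entropy."""
    return min(pivots, key=lambda a: expected_entropy(relevance, attribute_scores[a], pivots[a]))


# Toy example: pivot "a" splits the four images evenly, pivot "b" splits nothing.
relevance = [0.5, 0.5, 0.5, 0.5]
attribute_scores = {"a": [0.0, 1.0, 2.0, 3.0], "b": [0.0, 1.0, 2.0, 3.0]}
pivots = {"a": 1.5, "b": 10.0}
best = select_pivot(relevance, attribute_scores, pivots)
```

In this sketch each of the M candidate pivots is scored with a single pass over the images, so one selection step costs a number of operations proportional to M times the number of images.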

We use the following datasets:

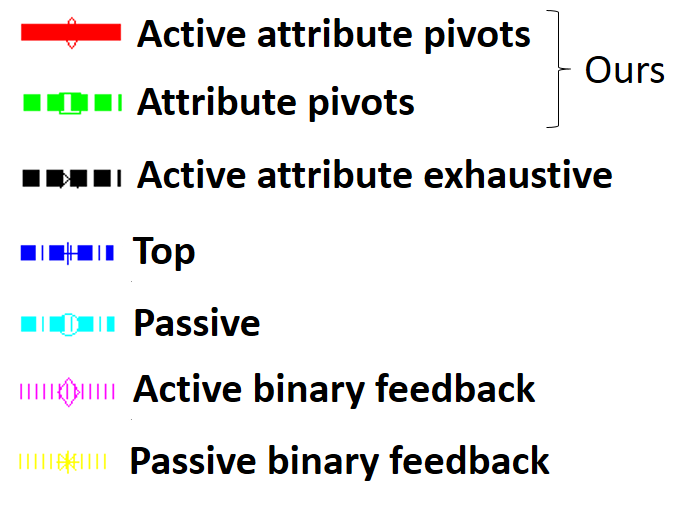

We compare our Active attribute pivots approach to the following methods:

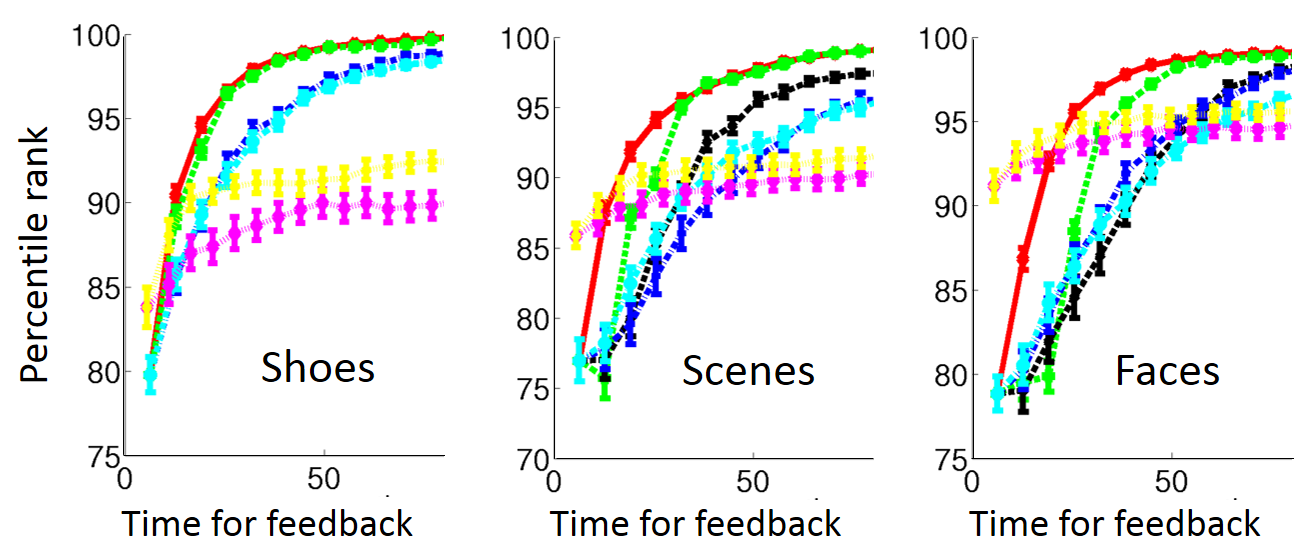

To allow more extensive testing, we run some experiments in which we simulate the user response. The two versions of our method significantly outperform the baselines. Our full method (red) outperforms its passive variant (green), with an average percentile rank 7.6% better after only 3 iterations.

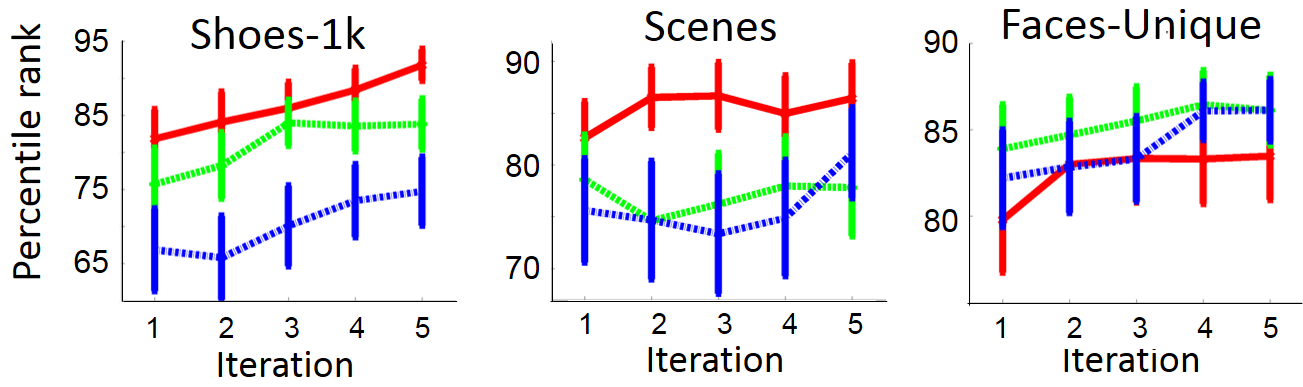

We also conduct iterative experiments with real users on Amazon Mechanical Turk, which confirm our observations. Our method achieves a 100-200 raw rank improvement on two datasets, and a negligible 0-10 raw rank loss on Faces:

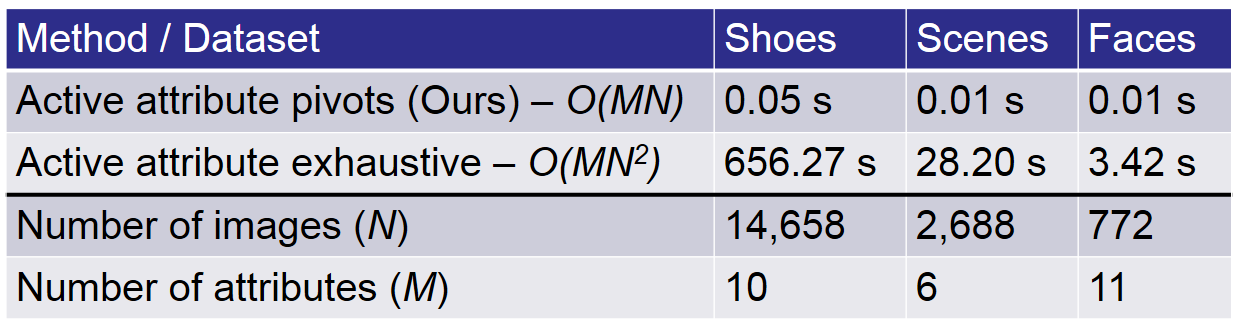

Furthermore, our method makes its selections orders of magnitude faster than the exhaustive method. This is because our method's complexity is only O(MN), while the exhaustive method has complexity O(MN^2).